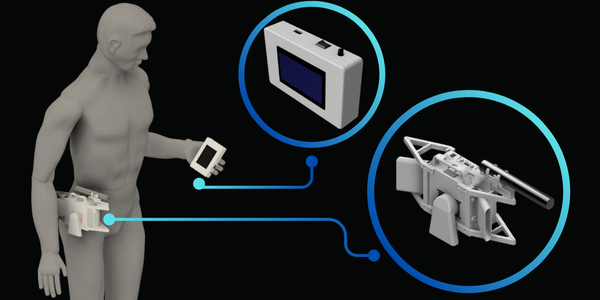

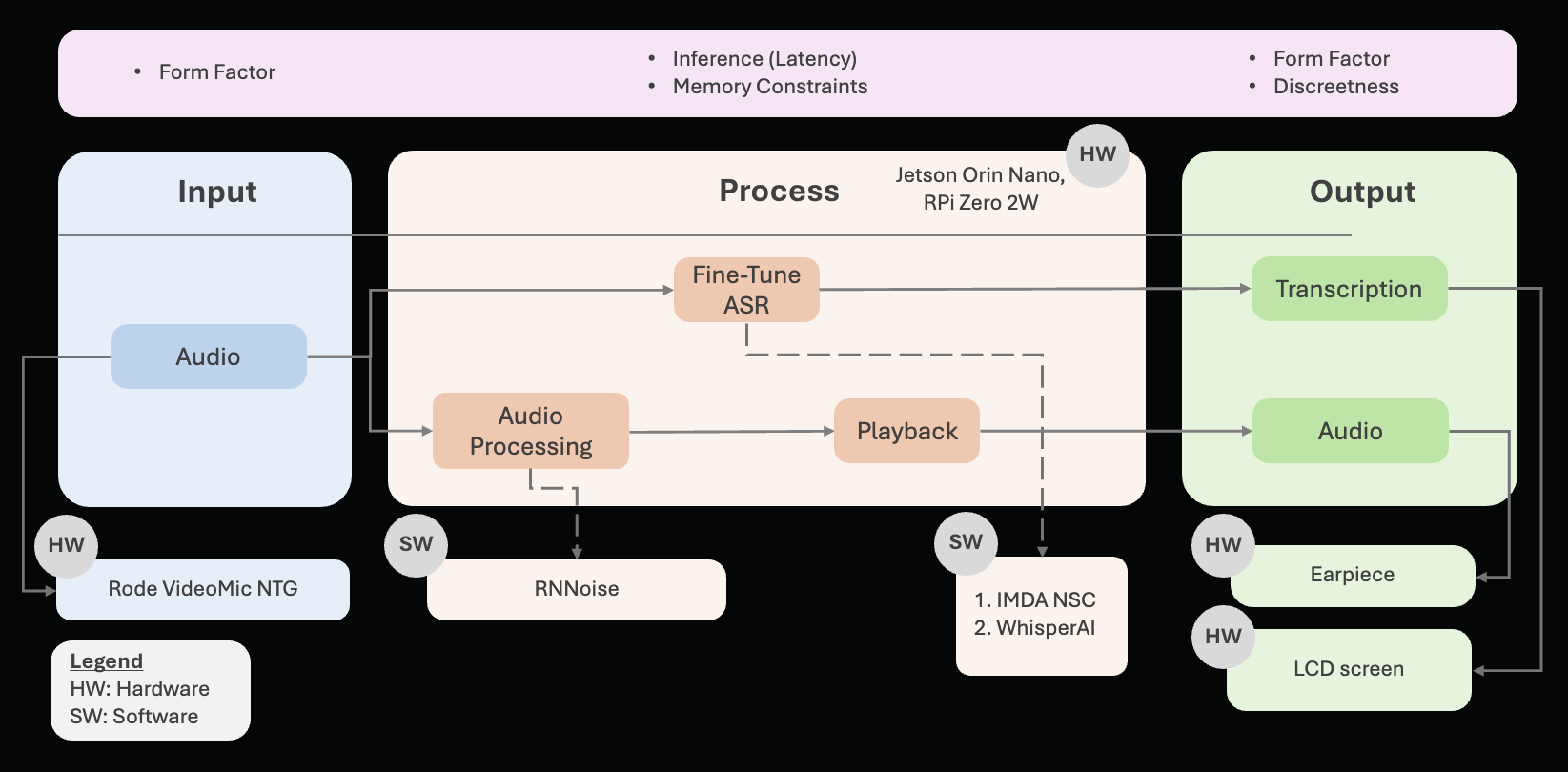

System Architecture

System Components

[HARDWARE]

- Shotgun Microphone: Captures speech effectively from up to 6 m away in natural ambient noise of 55 dB.

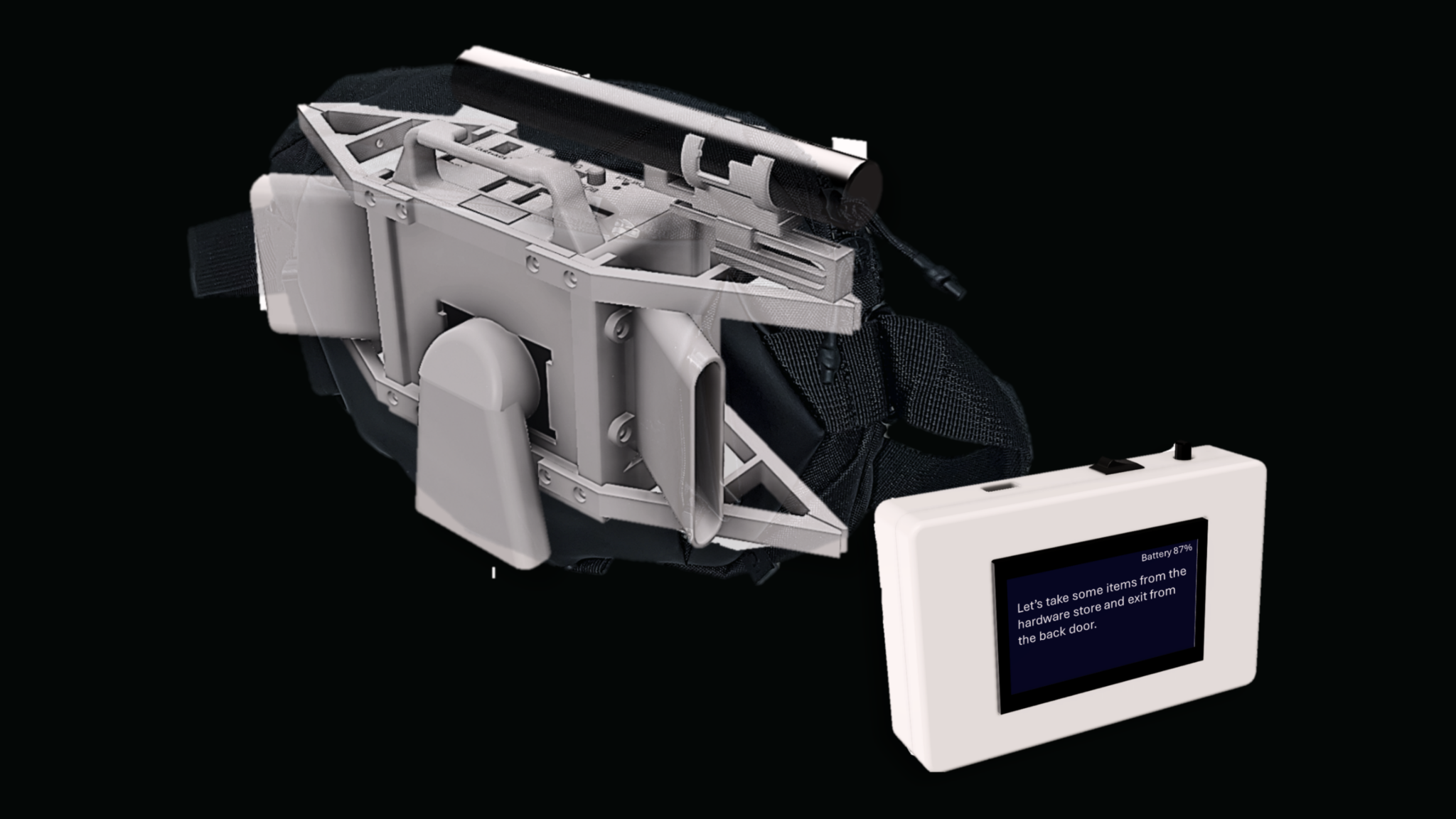

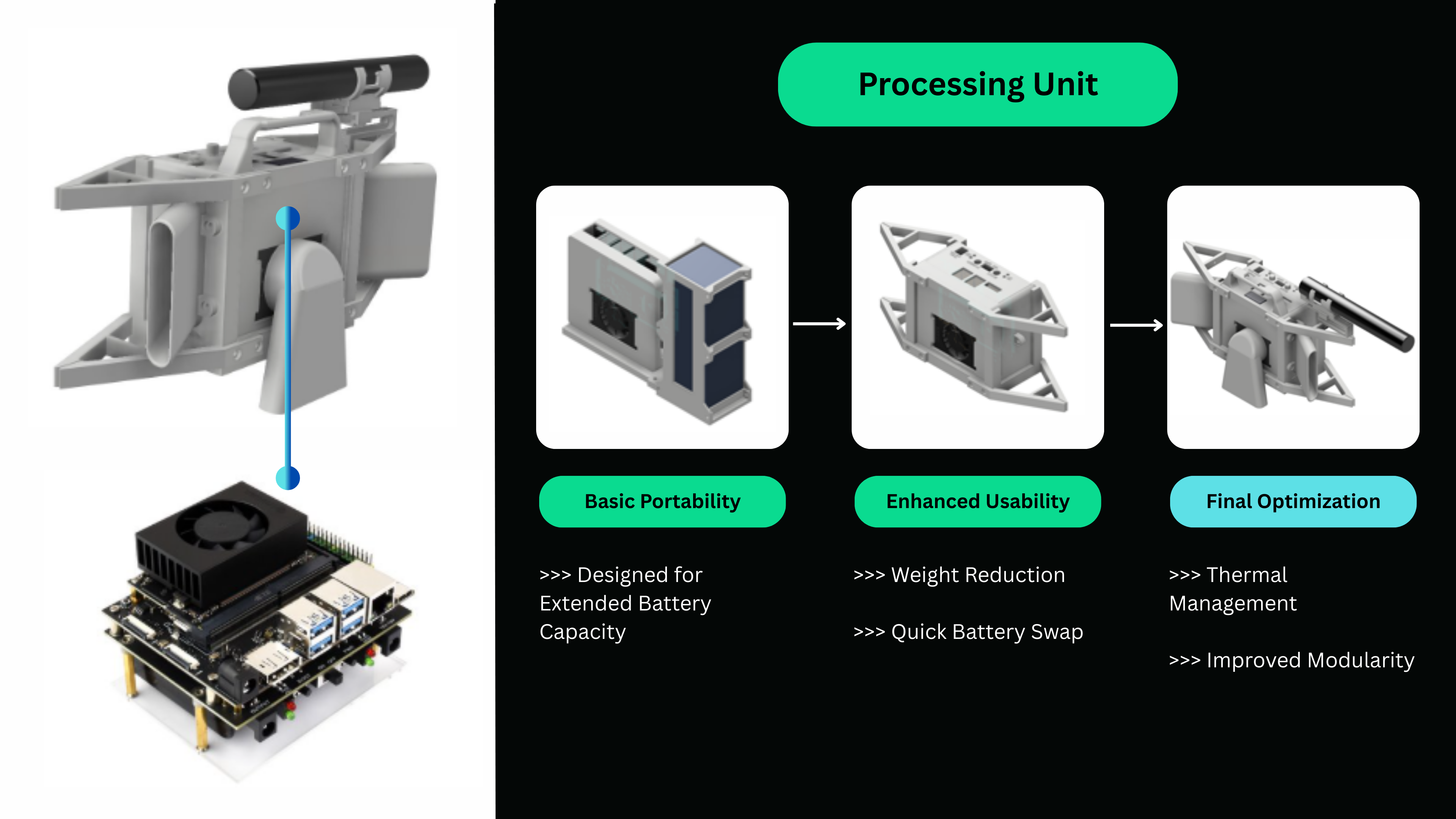

- Processor: Contains the computer (Jetson Orin Nano) and its battery for local speech processing. The lithium-ion batteries have a battery life of 4.25 hours and support a quick swap (40 s). At 0.936 kg, the unit fits discreetly inside a small satchel bag.

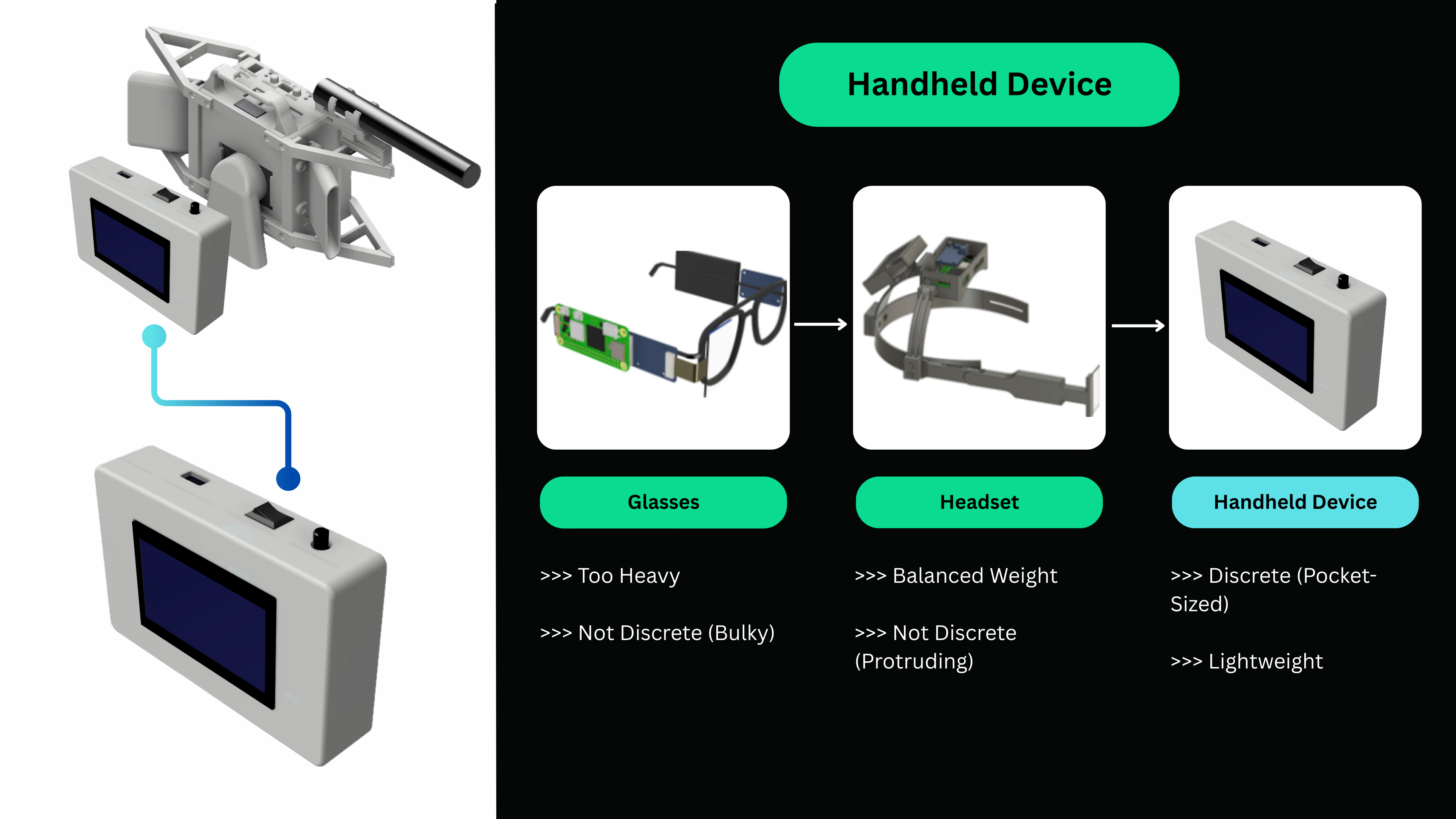

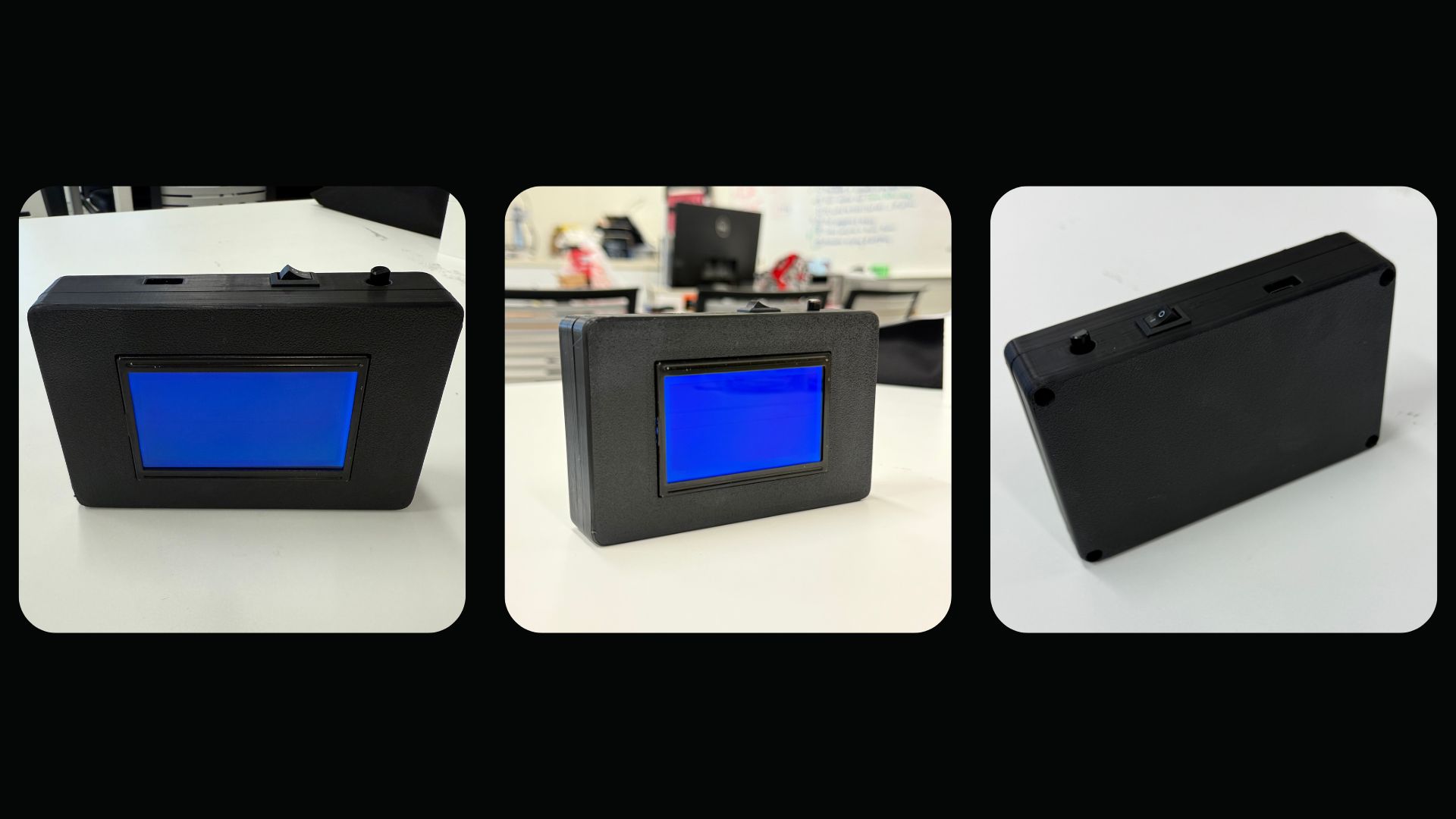

- Handheld LCD Device: Pocket-sized device for viewing transcriptions.

[SOFTWARE]

- Denoise Model: RNNoise --> Best balance of real-time performance (lowest latency), perceptual quality (highest PESQ), and efficient CPU operation, which makes it well suited to offline use.

- Transcription Model: Whisper --> Significantly outperformed the other models evaluated, with a word error rate (WER) of 8.9% on the Video dataset.
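
The flow through these components (microphone audio, then noise suppression, then transcription, then the handheld display) can be sketched as a small pipeline. This is a minimal illustration rather than the team's implementation: `TranscriptionPipeline`, `AudioChunk`, and the two stage callables are hypothetical names, and the stand-in functions in the usage example take the place of real RNNoise and Whisper wrappers.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator, List

AudioChunk = List[float]  # hypothetical type: one block of mono audio samples


@dataclass
class TranscriptionPipeline:
    """Chains a denoising stage and a transcription stage (both assumed callables)."""

    denoise: Callable[[AudioChunk], AudioChunk]  # e.g. a wrapper around RNNoise
    transcribe: Callable[[AudioChunk], str]      # e.g. a wrapper around Whisper

    def run(self, chunks: Iterable[AudioChunk]) -> Iterator[str]:
        """Yield one non-empty transcription per audio chunk, in order."""
        for chunk in chunks:
            text = self.transcribe(self.denoise(chunk)).strip()
            if text:
                # In the real system this text would be sent to the handheld display.
                yield text


# Usage with stand-in stages (no real models involved):
pipeline = TranscriptionPipeline(
    denoise=lambda chunk: chunk,                         # pass-through placeholder
    transcribe=lambda chunk: "hello" if chunk else "",   # placeholder recogniser
)
lines = list(pipeline.run([[0.1, 0.2], []]))
```

Keeping each stage behind a plain callable means a model can be swapped during benchmarking without touching the rest of the pipeline.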

Hardware Development Process

Through multiple design iterations and rigorous component comparisons, we refined each part of the system—from microphones and processors to display and power—balancing performance, size, and efficiency. This process-driven approach enabled us to arrive at an optimized, field-ready hardware solution.

Software Development Process

The software development journey involved iterative testing, model benchmarking, and real-world scenario simulations. By comparing noise suppression, transcription, and communication modules, we fine-tuned each layer for speed, accuracy, and offline reliability—resulting in a robust, low-latency real-time system.

Noise Suppression: RNNoise

To identify the optimal noise suppression model for real-time, on-device use, the team evaluated a range of static and deep learning methods using key metrics: ΔSNR (Signal-to-Noise Ratio improvement), PESQ (perceptual audio quality), ΔSTOI (speech intelligibility), and latency.

Among all models, RNNoise demonstrated the best balance for wearable deployment:

- Lowest Latency: RNNoise (CPU) had the lowest latency at 0.755 ms, compared to 52–155 ms for the other deep learning models.

- Highest Perceptual Quality: It scored the highest PESQ of 1.224, outperforming Facebook Denoiser (1.044) and DeepFilterNet (1.075).

- Good SNR Improvement: While Facebook Denoiser had the highest ΔSNR (+1.42 dB), RNNoise achieved a solid +0.90 dB at much lower latency.

- Efficient CPU Operation: RNNoise runs smoothly on a CPU, whereas other models such as Facebook Denoiser need a GPU to bring their latency down.
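
For readers unfamiliar with the metrics, the sketch below shows how ΔSNR and average processing latency could be measured. It uses the standard definition of SNR against a clean reference signal; the team's exact evaluation procedure is not described here, so the function names and the toy signals are assumptions. PESQ and STOI require dedicated implementations and are not shown.

```python
import math
import time


def snr_db(clean, estimate):
    """SNR in dB of `estimate`, treating its deviation from `clean` as noise."""
    signal_power = sum(c * c for c in clean)
    noise_power = sum((e - c) ** 2 for c, e in zip(clean, estimate))
    if noise_power == 0:
        return math.inf
    return 10.0 * math.log10(signal_power / noise_power)


def delta_snr_db(clean, noisy, denoised):
    """SNR improvement in dB: SNR after denoising minus SNR before."""
    return snr_db(clean, denoised) - snr_db(clean, noisy)


def mean_latency_ms(process, frames):
    """Average wall-clock time in milliseconds that `process` takes per frame."""
    start = time.perf_counter()
    for frame in frames:
        process(frame)
    return (time.perf_counter() - start) * 1000.0 / len(frames)


# Toy signals (made up for illustration): the denoiser halves the noise amplitude.
clean = [1.0, -1.0, 1.0, -1.0]
noisy = [c + 0.5 for c in clean]
denoised = [c + 0.25 for c in clean]
improvement = delta_snr_db(clean, noisy, denoised)  # about +6.02 dB
```

Halving the noise amplitude quarters the noise power, which corresponds to a gain of 10·log10(4) ≈ 6.02 dB.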

Automatic Speech Recognition: Whisper

To find the optimal ASR model for offline, on-device use, the team evaluated lightweight models using Normalized Word Error Rate (WER%) across five realistic datasets: Conversational AI, Phone Call, Meeting, Earnings Call, and Video.

Among all models, Whisper demonstrated the best performance for wearable deployment:

- Lowest Word Error Rate (WER): Whisper achieved the lowest WER across all datasets, e.g., 8.9% on Video and 9.7% on Earnings Call, compared to Kaldi (up to 69.9%) and wav2vec 2.0 (up to 36.3%).

- Fine-Tuning for Local Context: After fine-tuning on 530 hours of Singlish data, Whisper achieved a WER of 12.87%, showing a 66% improvement over Whisper-base.en and 93% improvement over Whisper-base.
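
As a reference for how such figures are typically computed, the sketch below implements word error rate as the word-level edit distance divided by the number of reference words, plus the relative reduction between two WER values. It assumes that "normalized" means lowercasing and stripping punctuation before scoring, and that the improvement percentages are relative reductions; neither is confirmed by the results above, and the function names are illustrative.

```python
import re


def normalize(text):
    """Lowercase, drop punctuation (keeping apostrophes), and split into words."""
    return re.sub(r"[^\w\s']", "", text.lower()).split()


def word_error_rate(reference, hypothesis):
    """(substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = normalize(reference), normalize(hypothesis)
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the processed prefix of ref and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1] / len(ref)


def relative_wer_reduction(baseline_wer, new_wer):
    """Fraction by which `new_wer` improves on `baseline_wer`."""
    return (baseline_wer - new_wer) / baseline_wer


# One substitution ("sat" -> "sit") and one deletion ("the") over six reference words.
wer = word_error_rate("The cat sat on the mat.", "the cat sit on mat")
```

Under this definition, a drop from a hypothetical baseline WER of 40% to 10% would be a 75% relative reduction. WER can exceed 100% when the hypothesis contains many insertions.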

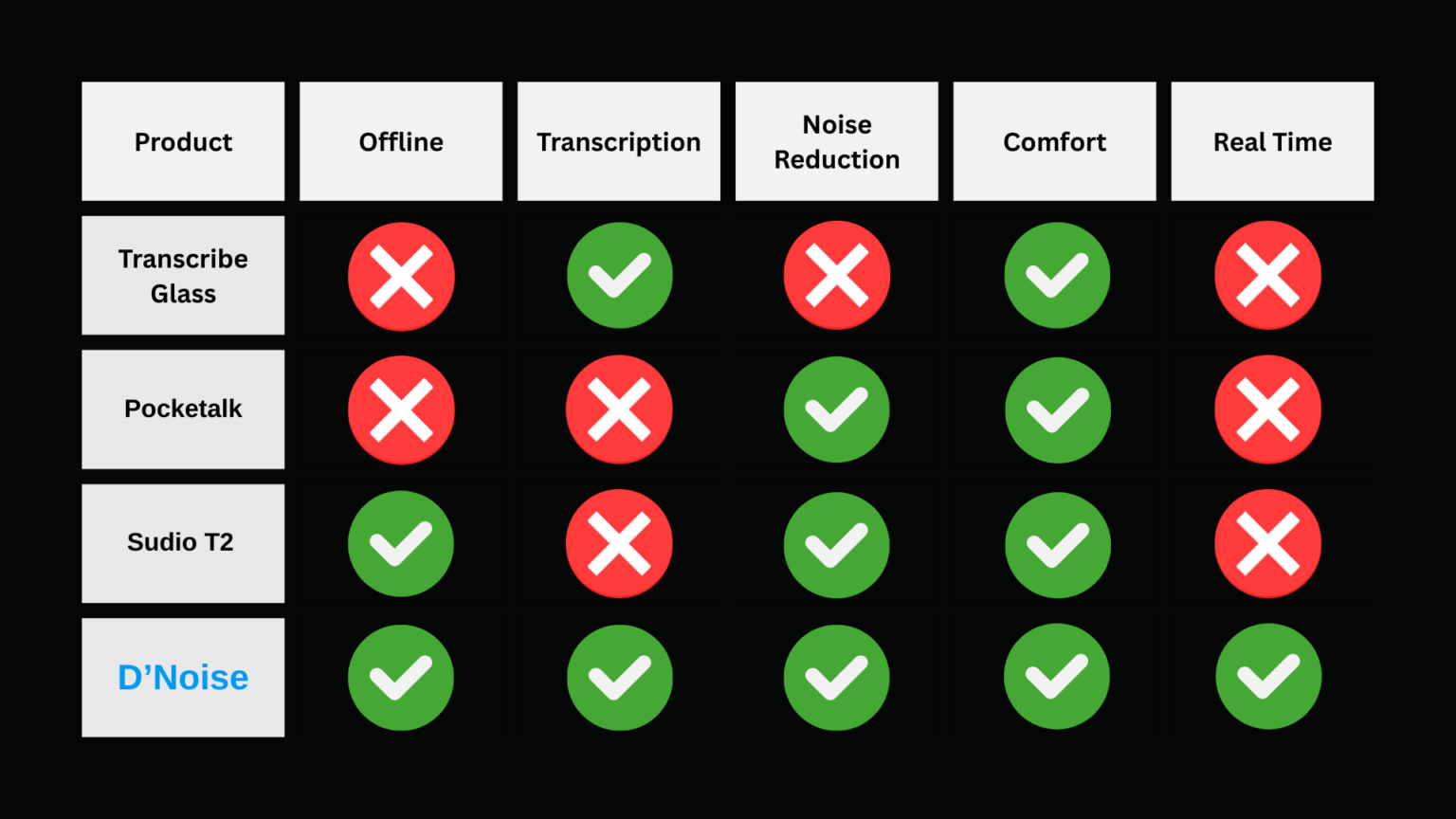

Comparison with Market

We measured our product's capabilities against leading market alternatives to show where it performs better and what it does differently.

Product Showcase

Acknowledgements

Special thanks to Professor Zhao Fang and Professor Tan Mei Chee, the team’s SUTD Capstone Mentors, for their consistent feedback and guidance. The team also appreciates the Capstone Office for its resources and support.

The team is grateful to the KLASS Engineering & Solutions team, especially Lu Zheng Hao (Project Manager) for coordinating meetings and offering valuable feedback, and to Nicholas Chan and Asif for their insightful technical advice. Terence Goh also provided key support during the proposal stage.

Thanks also to Professor Jihong for his helpful feedback during update sessions, and to Belinda Seet from the Center for Writing and Rhetoric for her assistance with presentations and consultations. The team appreciates the technical guidance from Professor Teo Tee Hui and Professor Joel Yang, which has been crucial to the project’s development.