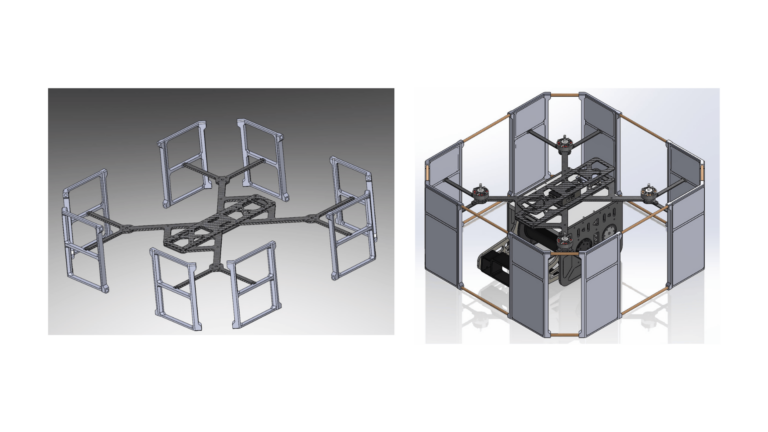

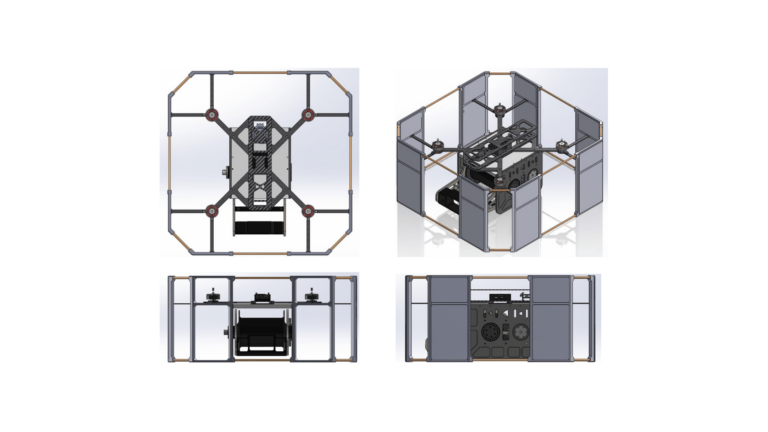

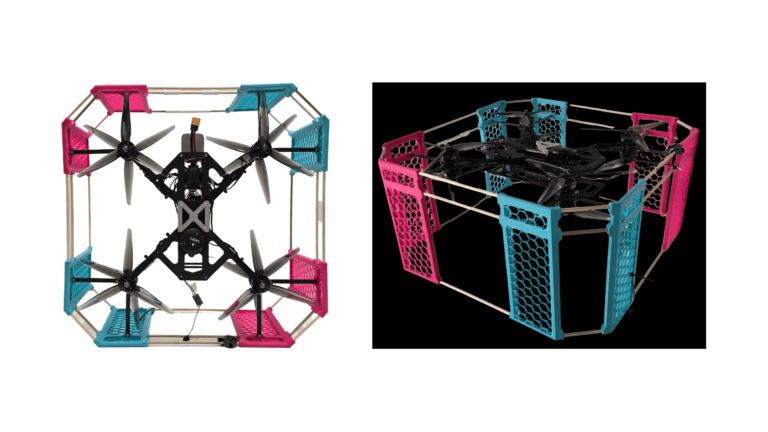

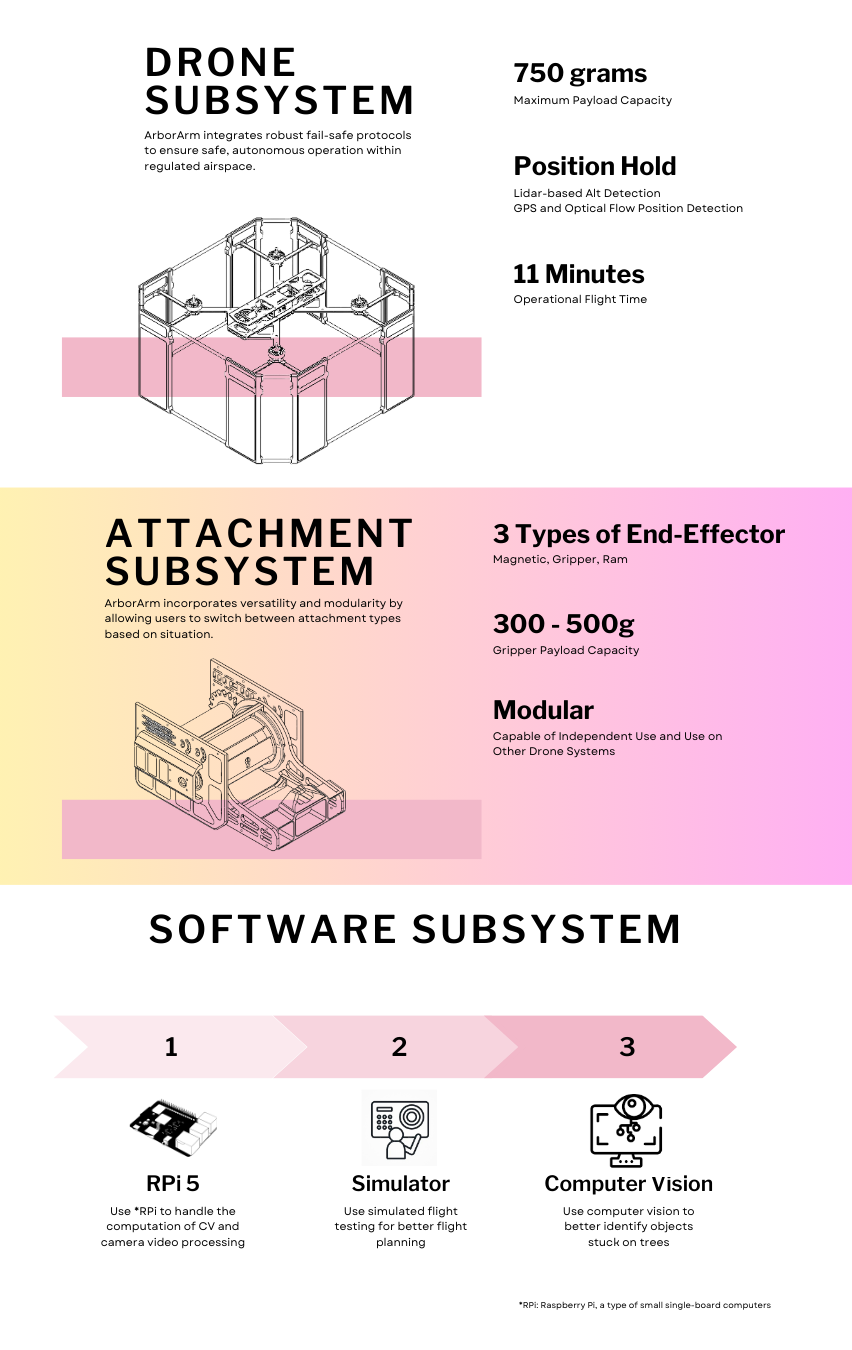

The unmanned aircraft (UA) design was adapted from previous iterations of a same-sized UA. The design needed to be light but sturdy enough to protect the UA and its attachment from impact. For this, we used FDM 3D printing with PLA-Aero filament by Bambu Lab, which is designed specifically for aircraft. We also combined the cage and landing gear into a single part, which streamlined both design and production.

To join the sections of the combined cage and landing gear, we used wooden dowels. We chose a joint that can break away so that, in an impact, the dowels are the first parts to fail. They are easy and inexpensive to replace.

For the UA itself, we kept the carbon fiber body. Cut from a carbon fiber plate, the body is stiff enough to resist flexing under its own weight plus that of the attachment. It is also efficient at dissipating heat, which allows the UA electronics to operate without damaging the body.

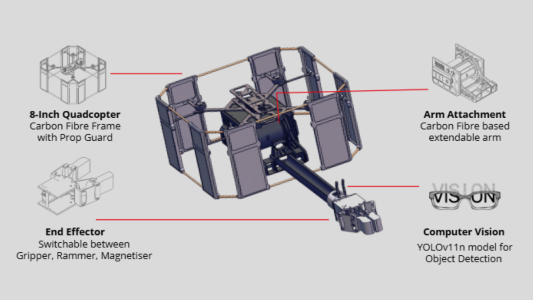

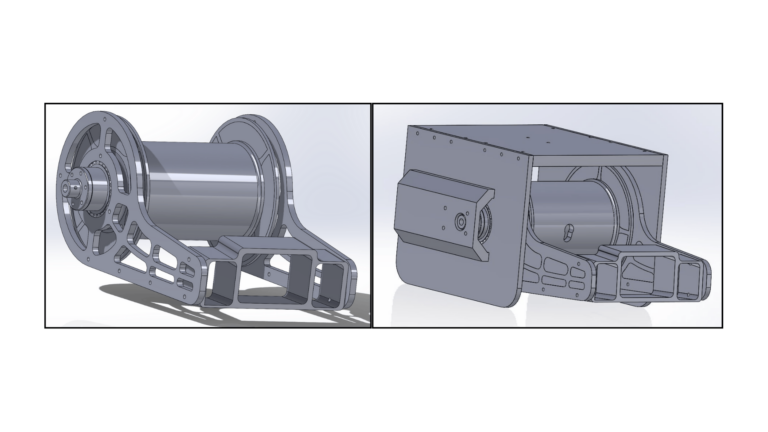

During the prototyping phase of the extendable arm, we initially considered using a linear actuator. However, we found that the linear actuator was too heavy due to its numerous metal and mechanical components. To address this issue, we conducted further research and discovered a flexible carbon fiber arm that can extend to form a rigid structure—an ideal solution for our object retrieval use case.

Based on this discovery, we designed a custom holder equipped with motor mounts that allow the carbon fiber arm to extend and retract smoothly. The top plate of the holder features strategically placed holes and indentations, providing slots for electrical components and secure mounting onto the drone.

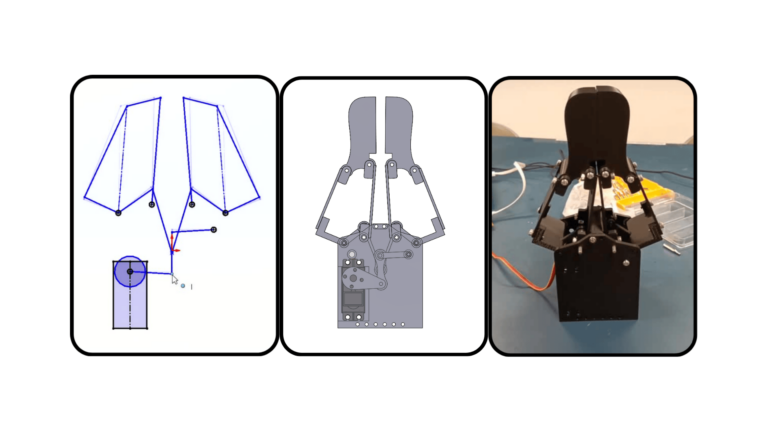

For the claw attachment, we initially bought an off-the-shelf mechanical claw. However, its gripping surface area was too small to hold items effectively. We then found that we could build our own claw based on a linear mechanism found online.

First, we used SolidWorks to create a simple kinematic diagram to visualize and finalize the mechanics on which the claw would operate.

Next, we designed the individual components of the claw in CAD and assembled them in SolidWorks to assess their functionality. This was followed by several iterations of 3D printing, as some parts were either poorly fitting or too fragile, requiring adjustments and reprints.

After assembling the claw, we tested whether it could retrieve different objects effectively.

Raspberry Pi Backend

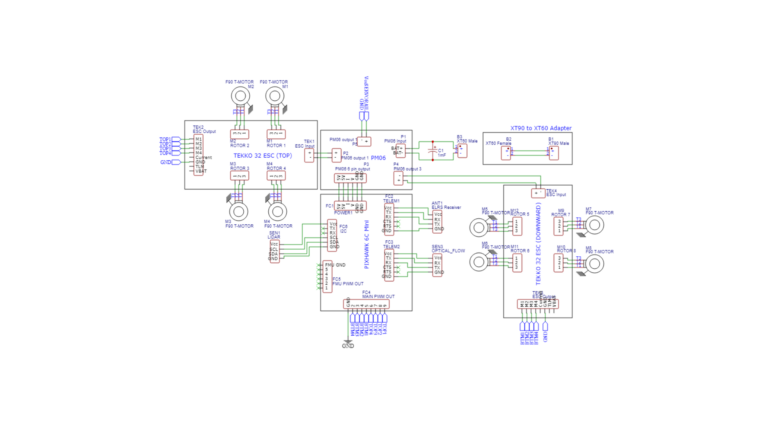

A Raspberry Pi 5 (RPi 5) was used as a companion computer to offload advanced computation from the flight controller (FC). The RPi is connected to the FC through a micro XRCE-DDS bridge, enabling bidirectional communication via predefined ROS topics. This architecture supports feedback control for managing dynamic physical interactions, such as mitigating whiplash effects during object retrieval tasks. Our research indicated that the default PX4 flight stack was sufficiently robust for these scenarios, and modifications were implemented to extend the bridge’s functionality to the attachment arm subsystem.

Communication Architecture

The bridge was initially intended as the primary pathway for control signals between the FC and the RPi. Button-toggle commands from the radio controller (e.g., rolling or unrolling the attachment arm, moving the arm up and down) were meant to route through the FC to the RPi via ROS topics. The RPi would then execute custom scripts to actuate the attachment arm. However, because the flight controller’s firmware had already been flashed and calibrated, the FC could not transmit custom messages, which necessitated a redesign.

The onboard cameras can live stream via VLC, broadcasting the feed over the local area network (LAN). Viewing the live feed requires only a connection to the same network with IP <IFORGOTTHEIP>.

Signal Handling and Power Constraints

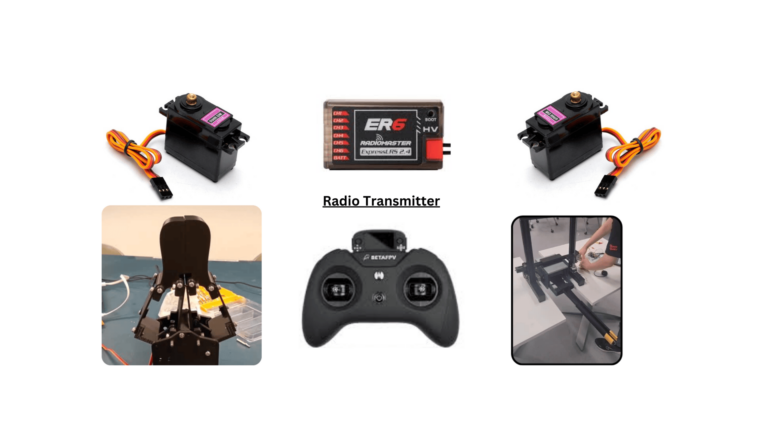

To bypass the FC’s firmware restrictions, an ExpressLRS (ELRS) receiver was integrated directly with the RPi via GPIO pins. This allowed the RPi to interpret radio controller signals without relying on the XRCE-DDS bridge. While effective, this approach increased power consumption significantly due to simultaneous operation of the RPi, ELRS receiver, and onboard cameras.
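As an illustration of how the RPi might interpret the radio controller signals, the sketch below decodes an RC channel frame. It assumes the ELRS receiver outputs the CRSF serial protocol over the UART pins, which the text above does not state. The channel index used for the arm switch and the helper names (`pack_channels`, `parse_rc_frame`, `switch_position`) are hypothetical, and reading bytes from the serial port is left out.

```python
from typing import List, Optional

CRSF_SYNC = 0xC8               # sync/address byte at the start of a frame
CRSF_TYPE_RC_CHANNELS = 0x16   # "RC channels packed" frame type
ARM_SWITCH_CHANNEL = 4         # hypothetical: index of the channel bound to the arm switch


def crc8_dvb_s2(data: bytes) -> int:
    """CRC-8 with polynomial 0xD5, computed over the type and payload bytes."""
    crc = 0
    for byte in data:
        crc ^= byte
        for _ in range(8):
            crc = ((crc << 1) ^ 0xD5) & 0xFF if crc & 0x80 else (crc << 1) & 0xFF
    return crc


def pack_channels(channels: List[int]) -> bytes:
    """Build a complete RC frame from 16 values of 11 bits each (used for testing)."""
    bits = 0
    for i, value in enumerate(channels):
        bits |= (value & 0x7FF) << (11 * i)
    body = bytes([CRSF_TYPE_RC_CHANNELS]) + bits.to_bytes(22, "little")
    # The length field counts the type, payload, and CRC bytes.
    return bytes([CRSF_SYNC, len(body) + 1]) + body + bytes([crc8_dvb_s2(body)])


def parse_rc_frame(frame: bytes) -> Optional[List[int]]:
    """Return the 16 channel values, or None if the frame is malformed."""
    if len(frame) != 26 or frame[0] != CRSF_SYNC or frame[1] != 24:
        return None
    body, crc = frame[2:-1], frame[-1]
    if body[0] != CRSF_TYPE_RC_CHANNELS or crc8_dvb_s2(body) != crc:
        return None
    bits = int.from_bytes(body[1:], "little")
    return [(bits >> (11 * i)) & 0x7FF for i in range(16)]


def switch_position(value: int, low: int = 172, high: int = 1811) -> str:
    """Classify a raw channel value as a three-position switch state."""
    third = (high - low) / 3
    if value < low + third:
        return "low"
    if value > high - third:
        return "high"
    return "mid"
```

In a setup like this, a script on the RPi could call `switch_position(channels[ARM_SWITCH_CHANNEL])` on each valid frame and trigger the arm only when the state changes, so that a held switch does not repeat the command.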

Computer Vision

To enable robust object detection for the attachment and retrieval process, a YOLOv11n model was deployed on the Raspberry Pi 5. This lightweight neural network was selected for its balance between inference speed and accuracy, making it suitable for deployment on edge devices with limited computational resources.

Model Training and Dataset

The YOLOv11n model was trained using a custom dataset composed of images capturing the target object from various distances, lighting conditions, and angles. The training was performed on a high-performance local machine before exporting the model to the Raspberry Pi.

To improve robustness in outdoor environments, data augmentation techniques such as random rotation, scaling, brightness shifts, and noise injection were applied during training. The final trained model achieved a mean average precision (mAP@0.5) of 85.2% on the validation set.
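To make the mAP@0.5 metric concrete, the sketch below computes average precision for one class from a ranked list of detections. It assumes each detection has already been marked as a true positive (IoU of at least 0.5 with a previously unmatched ground-truth box) or a false positive. It uses all-point interpolation of the precision-recall curve, which may differ in detail from what the training framework reports. The function name and input format are illustrative.

```python
from typing import List, Tuple


def average_precision(detections: List[Tuple[float, bool]], num_ground_truth: int) -> float:
    """Area under the precision-recall curve for one class.

    detections: (confidence, is_true_positive) pairs in any order.
    num_ground_truth: number of labeled objects of this class.
    """
    if num_ground_truth == 0:
        return 0.0

    # Rank detections from most to least confident.
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)

    tp = fp = 0
    recalls, precisions = [], []
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_ground_truth)
        precisions.append(tp / (tp + fp))

    # Replace each precision with the maximum precision at any higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])

    # Sum the rectangles under the interpolated curve.
    ap, prev_recall = 0.0, 0.0
    for recall, precision in zip(recalls, precisions):
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap
```

mAP is the mean of this value over all classes, so for a dataset with a single target class it equals the AP of that class.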

Deployment and Integration

The trained .pt model was converted and optimized using Ultralytics’ YOLOv11 framework and deployed via the NCNN runtime on the Raspberry Pi 5. The vision system interfaces with the ROS 2 stack to relay detection results (bounding box coordinates and confidence scores) to the onboard control system, which uses this information to guide the UA during retrieval.
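The text does not specify how the control system turns a bounding box into guidance. One common approach, shown here only as a sketch, is to compute how far the box center sits from the image center and feed that offset to the controller. The `Detection` fields, the function name, and the image size used in the example are assumptions.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Detection:
    """Hypothetical detection record: pixel-space box corners plus confidence."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    confidence: float


def centering_error(det: Detection, image_width: int, image_height: int) -> Tuple[float, float]:
    """Offset of the box center from the image center, normalized to [-1, 1].

    A positive x error means the object is right of center; a positive y error
    means it is below center (image rows grow downward).
    """
    center_x = (det.x_min + det.x_max) / 2
    center_y = (det.y_min + det.y_max) / 2
    error_x = (center_x - image_width / 2) / (image_width / 2)
    error_y = (center_y - image_height / 2) / (image_height / 2)
    return error_x, error_y
```

For example, in a 640x480 frame a box spanning x from 480 to 640 and y from 0 to 240 gives an error of `(0.75, -0.5)`, meaning the object is toward the upper right of the view.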

The object detection module was containerized with Docker for ease of deployment and version control on the Pi. The YOLOv11n model is initialized upon startup and continuously processes frames from the onboard camera. Detected objects are filtered based on confidence thresholds (>0.7), and tracking is performed using a lightweight SORT (Simple Online and Realtime Tracking) algorithm.
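The filtering and tracking step can be sketched as follows. This is a simplified stand-in, not the full SORT algorithm: SORT predicts each track with a Kalman filter and solves the assignment with the Hungarian method, whereas this sketch matches the previous boxes to the new ones greedily by IoU. The 0.7 confidence threshold comes from the text; the `min_iou` value and all function names are assumptions.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def filter_detections(detections: List[Tuple[Box, float]], threshold: float = 0.7) -> List[Box]:
    """Keep only boxes whose confidence exceeds the threshold."""
    return [box for box, confidence in detections if confidence > threshold]


def associate(tracks: Dict[int, Box], boxes: List[Box], min_iou: float = 0.3) -> Dict[int, Box]:
    """Greedily match existing tracks to new boxes by descending IoU.

    Unmatched boxes start new tracks; unmatched tracks are dropped.
    """
    pairs = sorted(
        ((iou(track_box, box), track_id, i)
         for track_id, track_box in tracks.items()
         for i, box in enumerate(boxes)),
        reverse=True,
    )
    updated: Dict[int, Box] = {}
    used = set()
    for score, track_id, i in pairs:
        if score < min_iou:
            break  # remaining pairs overlap too little to be the same object
        if track_id in updated or i in used:
            continue
        updated[track_id] = boxes[i]
        used.add(i)

    next_id = max(tracks, default=-1) + 1
    for i, box in enumerate(boxes):
        if i not in used:
            updated[next_id] = box
            next_id += 1
    return updated
```

Calling `associate(tracks, filter_detections(frame_detections))` once per frame keeps a stable ID on the target as long as consecutive boxes overlap enough.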

Performance Evaluation

Inference Time: ~8 ms per frame (on Raspberry Pi 5)

Detection Accuracy: 85.2% mAP@0.5 on validation dataset

Power Consumption: Approx. 2.1 W under full load