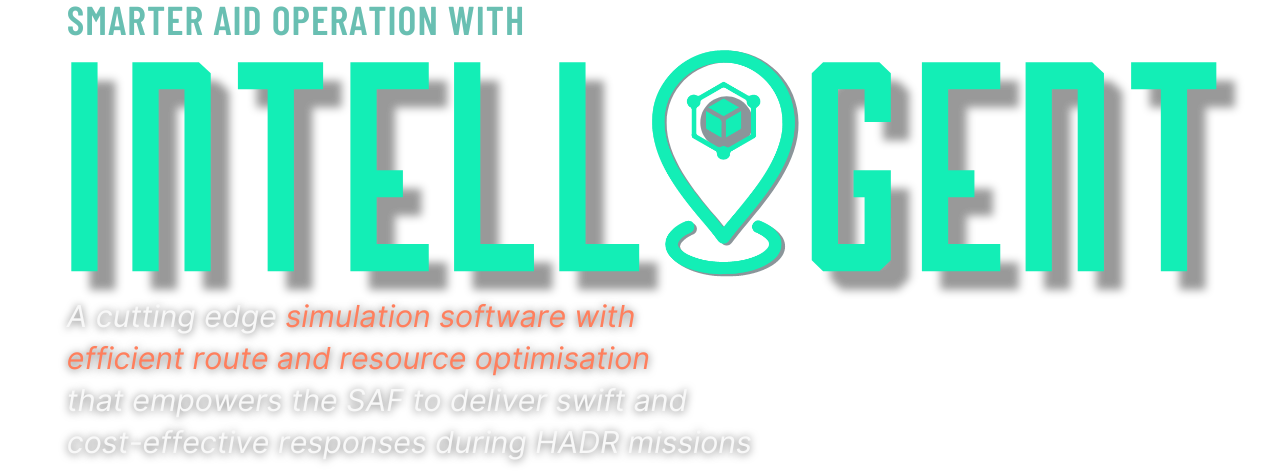

Background

SAF mission planning is currently slowed by complex processes and an overwhelming volume of information. This hinders swift responses to dynamic situations in life-saving missions, where time is paramount.

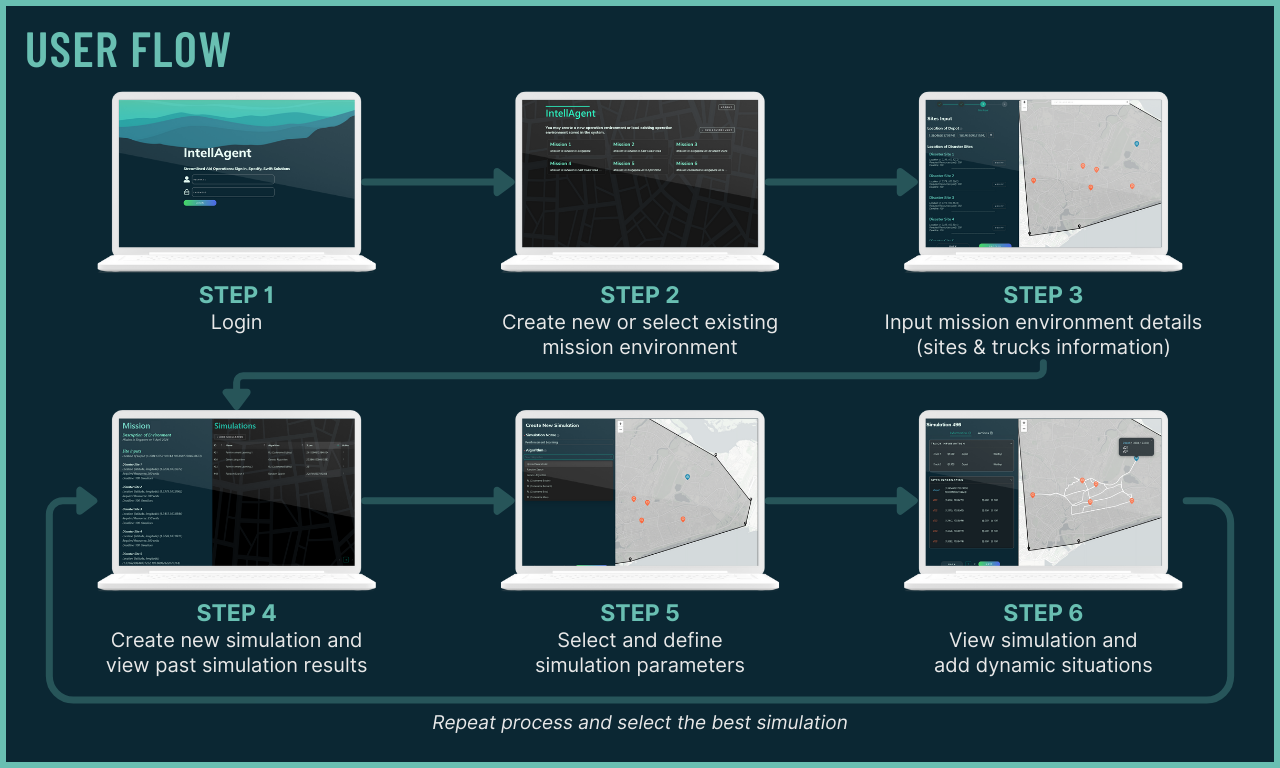

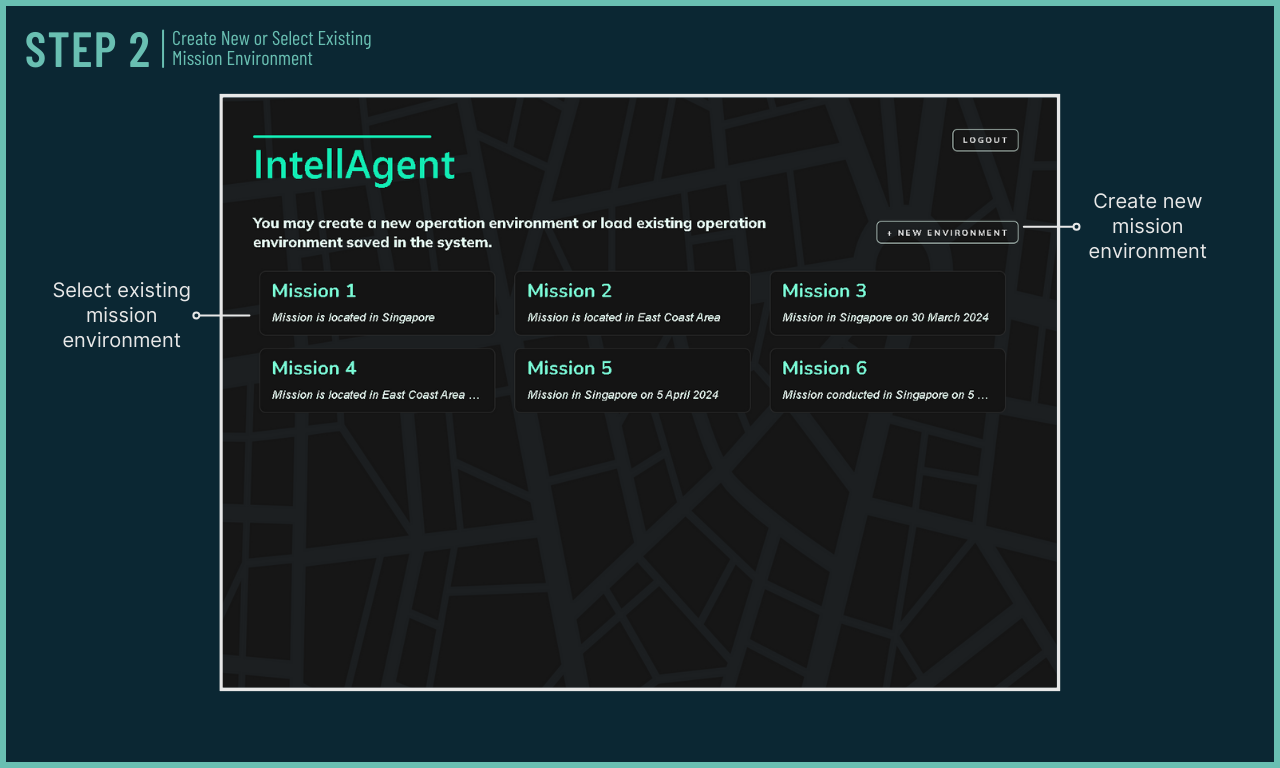

Learn how IntellAgent works!

Key Features

Simple Data Pipeline

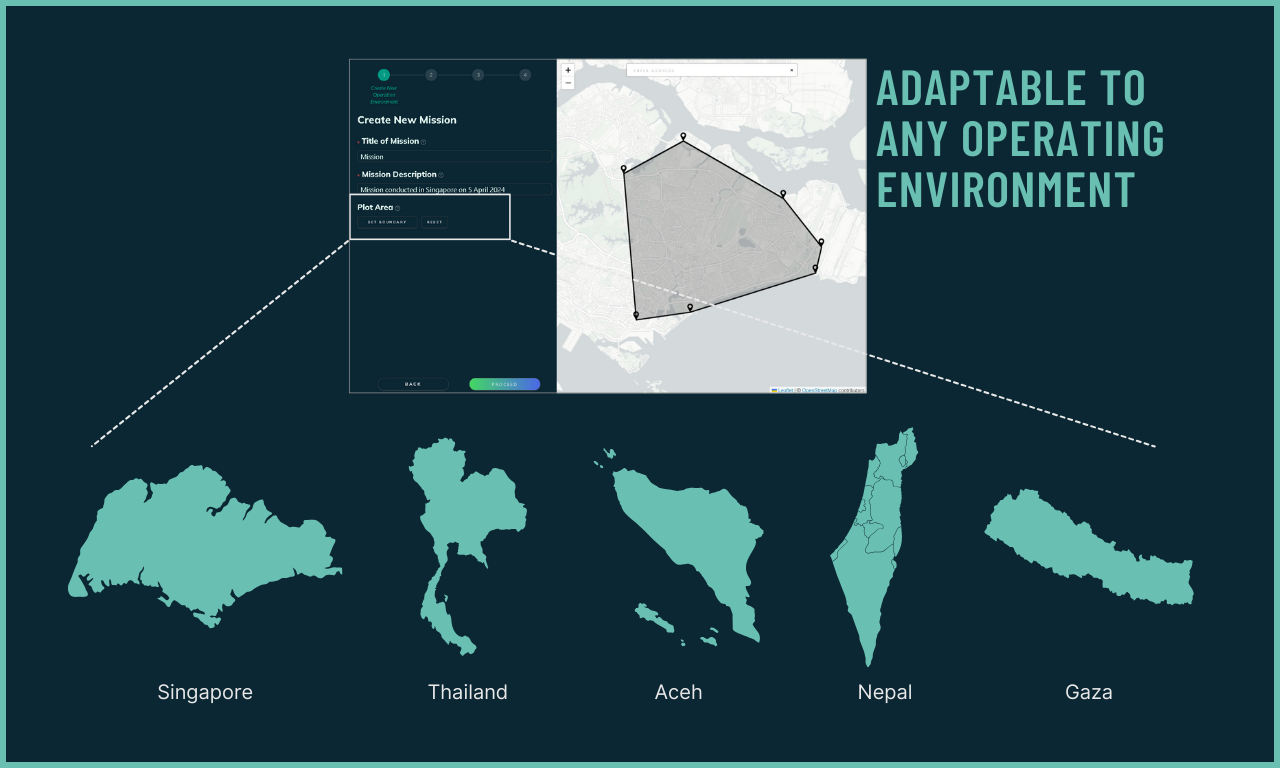

Mission planners can set a boundary on the map, and IntellAgent automatically retrieves the environment data within it, even for unfamiliar areas. This is supported by IntellAgent's quick aggregation of data from various sources, such as OpenStreetMap.
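As a rough illustration of the boundary-driven step, the sketch below turns a planner-drawn bounding box into an Overpass QL query for OpenStreetMap road data. This is an assumption about how such a pipeline could look, not IntellAgent's actual implementation: the `BoundingBox` class and `build_overpass_query` function are hypothetical names, and the sketch only builds the query text without sending it anywhere.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoundingBox:
    """Hypothetical planner-drawn boundary, in decimal degrees."""
    south: float
    west: float
    north: float
    east: float

    def __post_init__(self):
        # Reject inverted or out-of-range boxes early.
        # Boxes that cross the antimeridian are not handled in this sketch.
        if not (-90 <= self.south < self.north <= 90):
            raise ValueError("south must be below north, both within [-90, 90]")
        if not (-180 <= self.west < self.east <= 180):
            raise ValueError("west must be below east, both within [-180, 180]")


def build_overpass_query(box: BoundingBox, timeout_s: int = 25) -> str:
    """Build an Overpass QL query for all ways tagged 'highway' inside the box.

    Overpass bounding boxes are written as (south, west, north, east).
    """
    bbox = f"{box.south},{box.west},{box.north},{box.east}"
    return (
        f"[out:json][timeout:{timeout_s}];\n"
        f'way["highway"]({bbox});\n'
        "out geom;"
    )


# Illustrative coordinates only: a box roughly covering Singapore.
query = build_overpass_query(BoundingBox(1.22, 103.60, 1.47, 104.04))
print(query)
```

The resulting text could then be submitted to an Overpass API endpoint and the returned road geometry converted into the simulation's map.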

Smart Route Optimisation

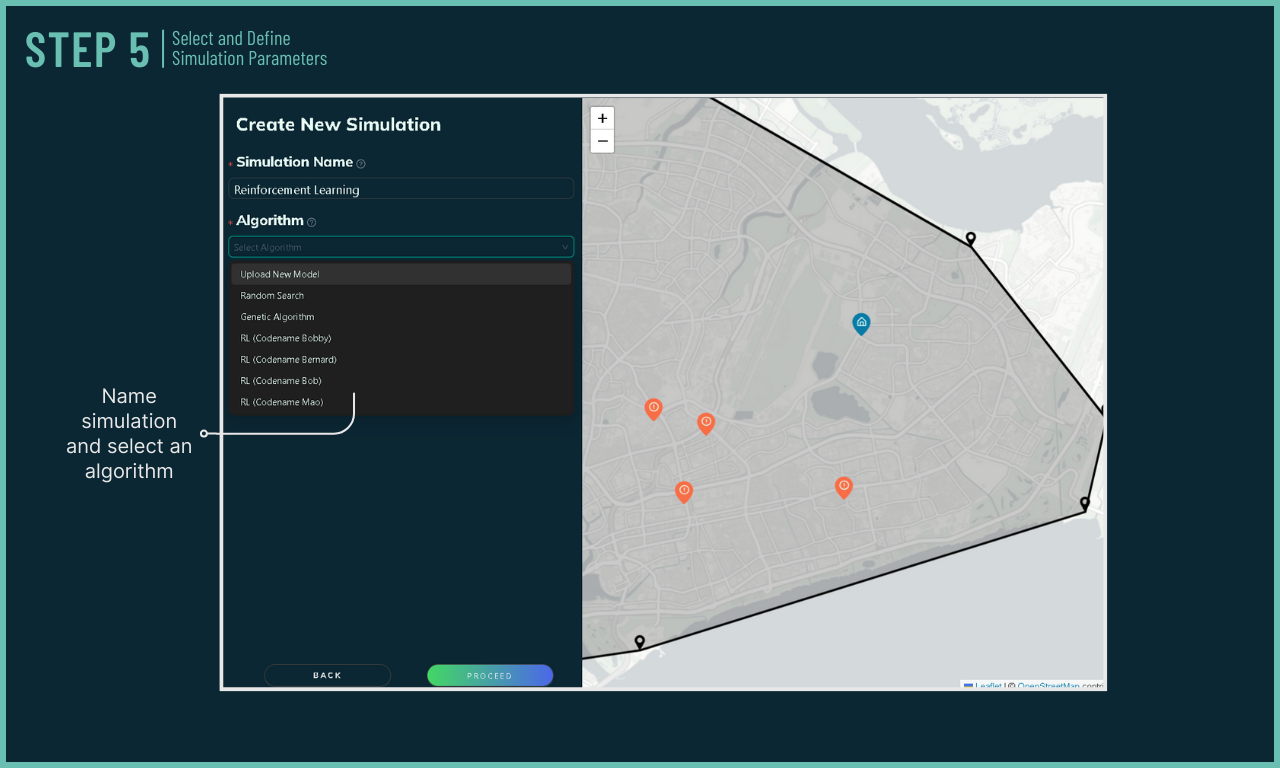

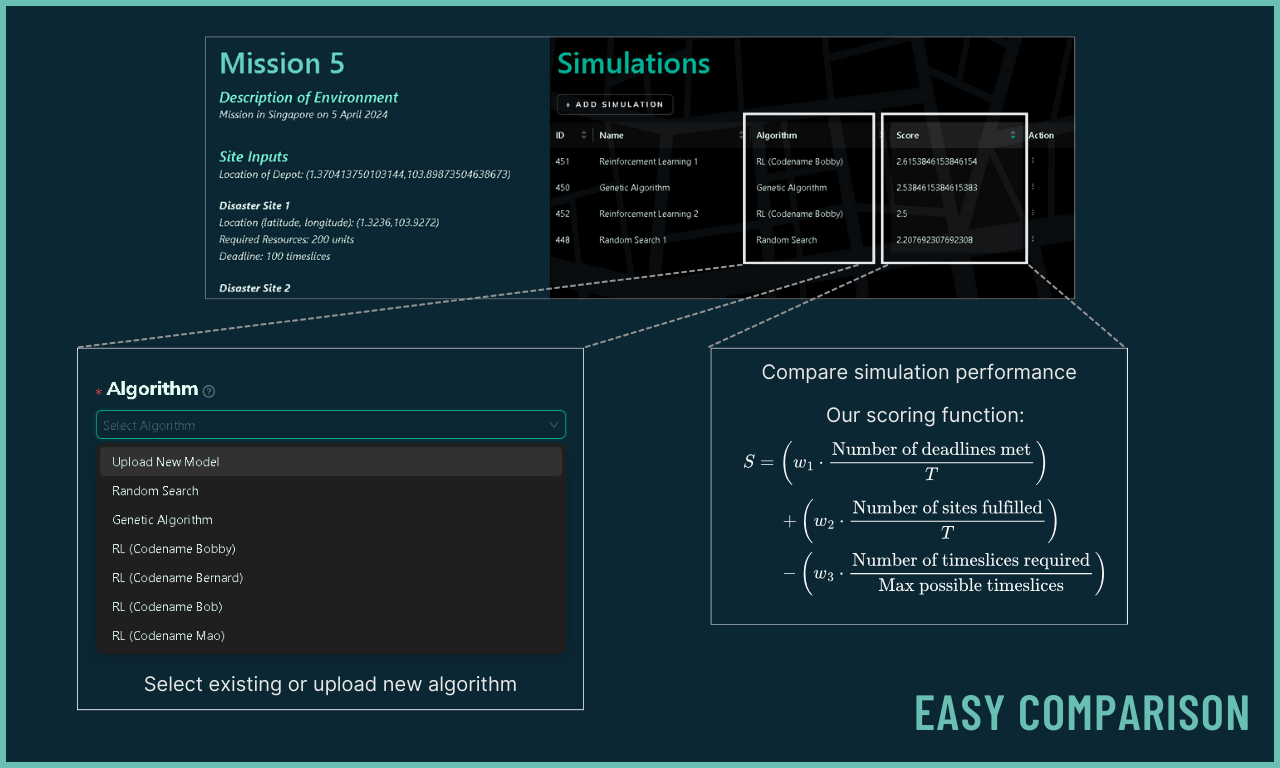

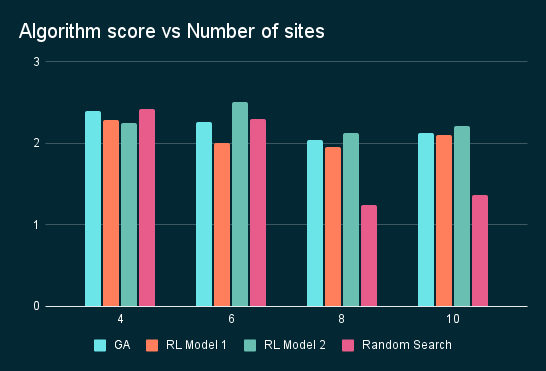

IntellAgent offers a range of algorithms, such as Reinforcement Learning (RL) and Genetic Algorithms (GA). The performance of each simulation is then evaluated with a score for quick comparison.

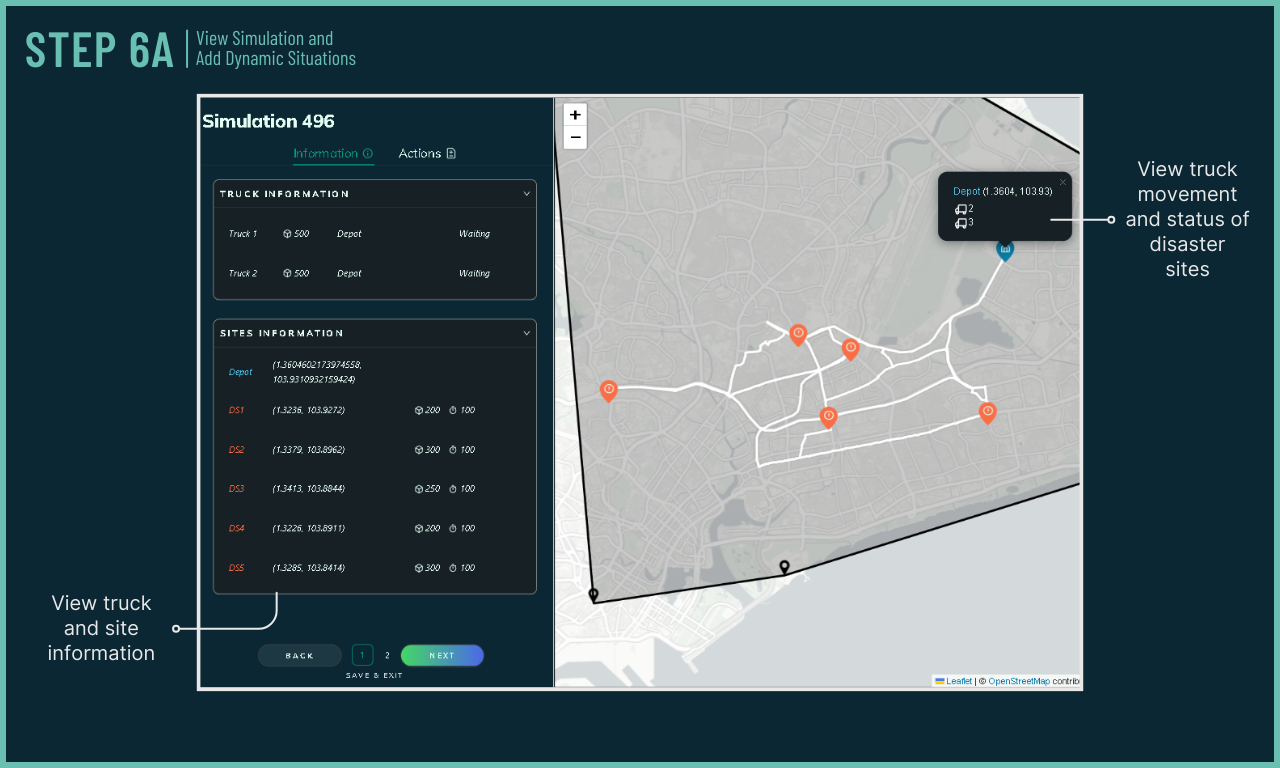

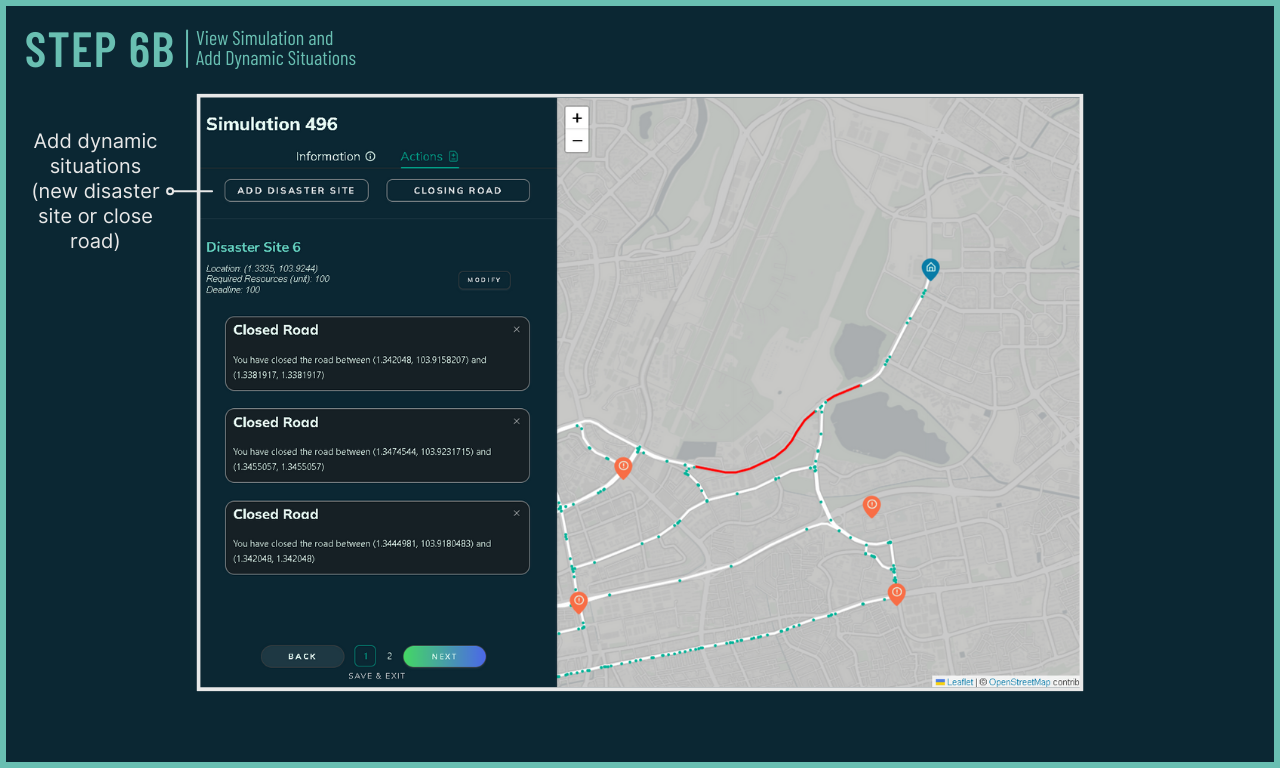

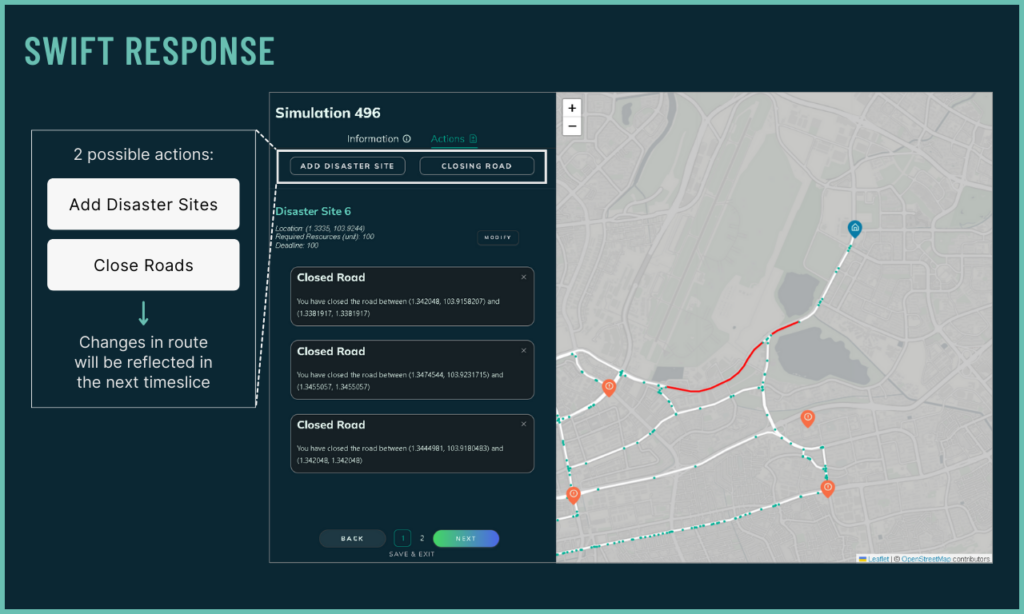

Responsive to Dynamic Situations

Mission planners can easily alter the simulation environment during runtime to simulate dynamic events. When the environment changes, IntellAgent adapts and recalculates a new solution.
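The idea of recalculating after a runtime change can be sketched with a toy road graph. Note the substitution: this example uses plain Dijkstra shortest-path search as a stand-in for IntellAgent's RL and GA solvers, and the graph, node names, and `block_road` helper are all hypothetical.

```python
import heapq


def shortest_path(graph, start, goal):
    """Dijkstra's algorithm on {node: {neighbour: cost}}.

    Returns (cost, path), or (inf, []) if the goal is unreachable.
    """
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbour, weight in graph.get(node, {}).items():
            if neighbour not in visited:
                heapq.heappush(queue, (cost + weight, neighbour, path + [neighbour]))
    return float("inf"), []


def block_road(graph, a, b):
    """Return a copy of the graph with the road between a and b removed."""
    updated = {node: dict(neighbours) for node, neighbours in graph.items()}
    updated.get(a, {}).pop(b, None)
    updated.get(b, {}).pop(a, None)
    return updated


# Toy map: two ways from the depot to the disaster site.
roads = {
    "depot": {"A": 2, "B": 5},
    "A": {"depot": 2, "site": 2},
    "B": {"depot": 5, "site": 1},
    "site": {"A": 2, "B": 1},
}

before = shortest_path(roads, "depot", "site")
# The planner blocks the road A-site at runtime, so the route is solved again.
after = shortest_path(block_road(roads, "A", "site"), "depot", "site")
print(before)  # (4.0, ['depot', 'A', 'site'])
print(after)   # (6.0, ['depot', 'B', 'site'])
```

The original map is left untouched, so the planner can compare the solutions before and after the change.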

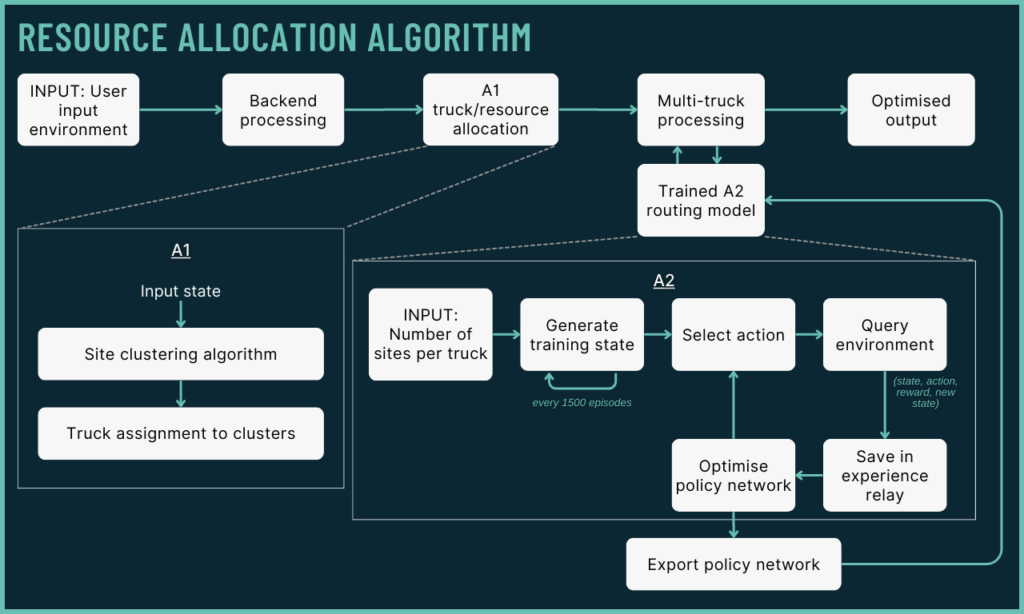

A Hierarchical Approach to Tackle Complex Problems

By breaking down the problem into smaller subproblems, we can optimise at a granular level. First, we assign sites to each truck based on site locations and required resources. Then, for each truck, we solve the routing problem, balancing resource delivery, deadlines, and travel costs.
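The two stages described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the project's solver: both stages use simple greedy heuristics in place of RL or GA, and the data layout, function names, and `deadline_weight` parameter are hypothetical.

```python
import math


def dist(a, b):
    """Straight-line distance between two (x, y) points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def assign_sites(trucks, sites):
    """Stage 1: give each site to the nearest truck with enough spare capacity.

    trucks: {name: {"pos": (x, y), "capacity": int}}
    sites:  {name: {"pos": (x, y), "demand": int, "deadline": float}}
    """
    remaining = {name: info["capacity"] for name, info in trucks.items()}
    plan = {name: [] for name in trucks}
    # Place the most demanding sites first so they are not squeezed out.
    for site in sorted(sites, key=lambda s: -sites[s]["demand"]):
        demand = sites[site]["demand"]
        candidates = [t for t in trucks if remaining[t] >= demand]
        if not candidates:
            raise ValueError(f"no truck has capacity for {site}")
        best = min(candidates, key=lambda t: dist(trucks[t]["pos"], sites[site]["pos"]))
        plan[best].append(site)
        remaining[best] -= demand
    return plan


def route_truck(start, assigned, sites, deadline_weight=1.0):
    """Stage 2: order one truck's sites, trading travel distance against deadlines."""
    pos, route, todo = start, [], set(assigned)
    while todo:
        # Lower is better: a short hop, or a deadline that is coming up soon.
        nxt = min(
            todo,
            key=lambda s: dist(pos, sites[s]["pos"]) + deadline_weight * sites[s]["deadline"],
        )
        route.append(nxt)
        pos = sites[nxt]["pos"]
        todo.remove(nxt)
    return route


trucks = {
    "T1": {"pos": (0, 0), "capacity": 10},
    "T2": {"pos": (10, 0), "capacity": 10},
}
sites = {
    "S1": {"pos": (1, 0), "demand": 4, "deadline": 5.0},
    "S2": {"pos": (2, 0), "demand": 4, "deadline": 1.0},
    "S3": {"pos": (9, 0), "demand": 6, "deadline": 3.0},
}
plan = assign_sites(trucks, sites)
routes = {t: route_truck(trucks[t]["pos"], plan[t], sites) for t in trucks}
print(plan)    # {'T1': ['S1', 'S2'], 'T2': ['S3']}
print(routes)  # {'T1': ['S2', 'S1'], 'T2': ['S3']}
```

Splitting the problem this way means each routing subproblem only sees the handful of sites assigned to one truck, which keeps the search space small.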

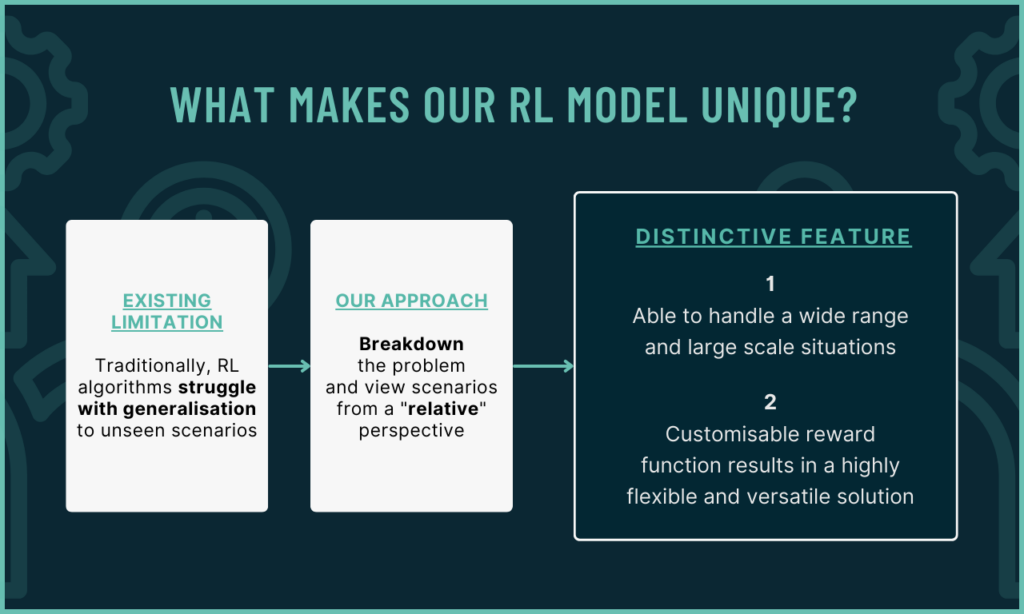

A Novel Perspective for Reinforcement Learning

Our algorithm abstracts the problem and translates it into values relative to the agent's baseline. Agents can therefore handle a wide range of situations beyond predetermined environments. Furthermore, a customisable reward function allows the agent to prioritise different objectives, resulting in a highly flexible and versatile solution.
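One possible reading of "values relative to the agent's baseline" is that each site is described by its offset from the agent and by how much of its demand the agent can currently cover, rather than by absolute map coordinates. The sketch below illustrates that reading together with a weighted reward. The feature choice, the `RewardWeights` fields, and the default weights are all assumptions made for illustration, not the project's actual design.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RewardWeights:
    """Hypothetical knobs a planner could tune to shift priorities."""
    delivered: float = 1.0   # reward per unit of resource delivered
    lateness: float = 0.5    # penalty per hour past a deadline
    travel: float = 0.1      # penalty per unit of distance travelled


def relative_observation(agent_pos, agent_load, sites):
    """Describe each site relative to the agent instead of the map.

    sites: list of ((x, y), demand) pairs.
    Returns a list of (dx, dy, coverage) tuples.
    """
    obs = []
    for (x, y), demand in sites:
        dx, dy = x - agent_pos[0], y - agent_pos[1]
        # Fraction of the site's demand the agent could meet now, capped at 1.
        coverage = min(1.0, agent_load / demand) if demand > 0 else 1.0
        obs.append((dx, dy, coverage))
    return obs


def reward(delivered, hours_late, distance, weights=RewardWeights()):
    """Weighted sum of objectives; changing the weights changes the priorities."""
    return (
        weights.delivered * delivered
        - weights.lateness * hours_late
        - weights.travel * distance
    )


# The same situation shifted across the map gives an identical observation,
# which is what lets one policy be reused in unseen environments.
print(relative_observation((2, 3), 5, [((5, 7), 10)]))     # [(3, 4, 0.5)]
print(relative_observation((12, 13), 5, [((15, 17), 10)])) # [(3, 4, 0.5)]

# A deadline-focused planner simply raises the lateness weight.
urgent = RewardWeights(lateness=5.0)
print(reward(delivered=10, hours_late=2, distance=20, weights=urgent))
```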

Key Insights

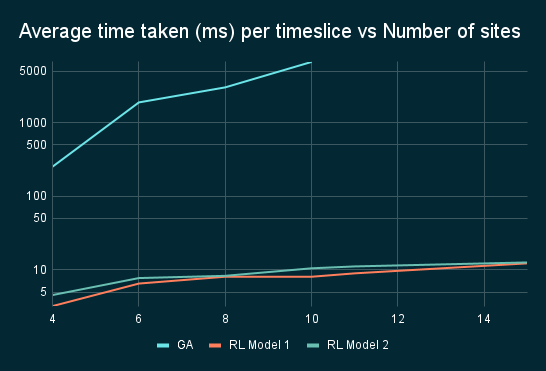

The time and memory required by GA grow exponentially with the number of sites, while RL inference remains quick. Our novel approach to RL allows us to calculate optimal solutions quickly even with a large number of disaster sites.

While the performance of GA and RL is relatively similar, the computational resources and time required to run GA grow exponentially, making it less practical.

In collaboration with

We extend our heartfelt gratitude to our industry mentors from DSTA, Marcus and Zhengyi, for their unwavering support, and to our SUTD mentor, Prof. Zhao Na, for her guidance. Many thanks to Dr. Bernard Tan and the Capstone office for their assistance.