Problem

Singapore Police Force (SPF) officers communicate verbally with members of the public (MOPs) as part of their duties. According to the public feedback reports from 2019 to 2022, the SPF received an average of 500 complaints per year from MOPs. These complaints stem from negative experiences that MOPs may have had during their interactions with SPF personnel. This conflicts with the SPF’s goal of building trust with MOPs in order to better protect the public.

Training through roleplaying, simulations and exercises is considered an effective way to prevent such situations, as it builds experience and intuition in trainee patrol officers. However, effective physical training typically requires officers to travel to the training location, and it can be difficult to schedule around a patrol officer’s shift hours. Furthermore, scaling up training to accommodate more trainees significantly increases the physical resources required, such as logistics and training venue bookings, making it costly to hold large training sessions frequently.

There is a demand for training methods that make the most of available resources and help prepare frontline officers to handle interactions empathetically during tense and uncertain situations.

Our Solution

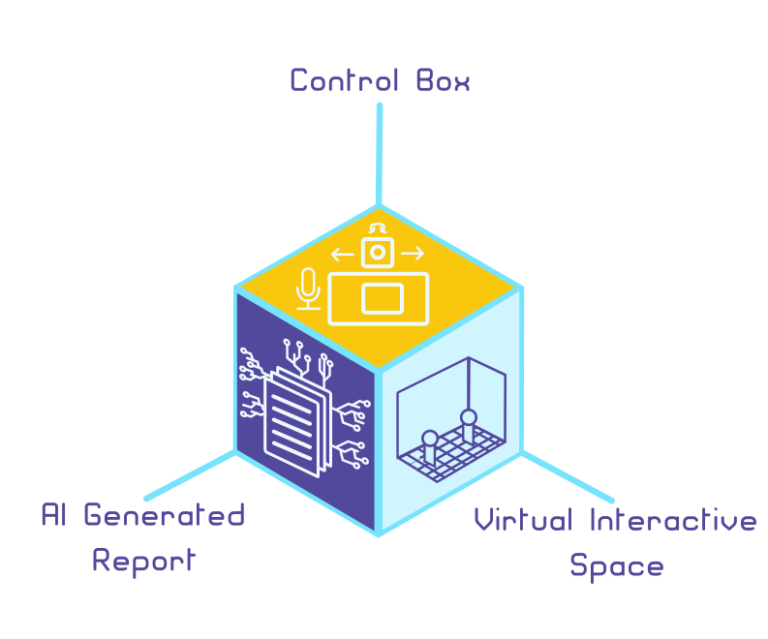

The E.V.A. (Emotion. Virtual Space. AI) System is a blend of hardware engineering, artificial intelligence and software engineering that seeks to augment verbal interaction training by providing a Virtual Interactive Space for trainers and organizations to conduct training remotely anytime, from anywhere.

With just a control box and a laptop, E.V.A. can be set up easily, allowing trainees to quickly join their remote training sessions. Facial mapping onto avatars keeps interactions as realistic as possible by conveying facial expressions and emotions accurately between trainers and trainees. After the training session, the Emotional Artificial Intelligence models are used to generate performance reports that assist trainers in providing feedback through a comprehensive analysis of the trainees’ performance in the training scenarios.

By incorporating the interactive aspects of physical training into a more time-efficient remote training regime through E.V.A., we can help deliver more efficient and effective training to frontline officers and better prepare them for unexpected situations.

User Interaction Framework

In the E.V.A. System, user interaction is centered on the virtual space, which is implemented in Unreal Engine. The users, namely trainees and trainers, use Control Boxes during training to collect facial and voice data, both for interaction in the virtual space and for emotion classification by the Emotional AI models.

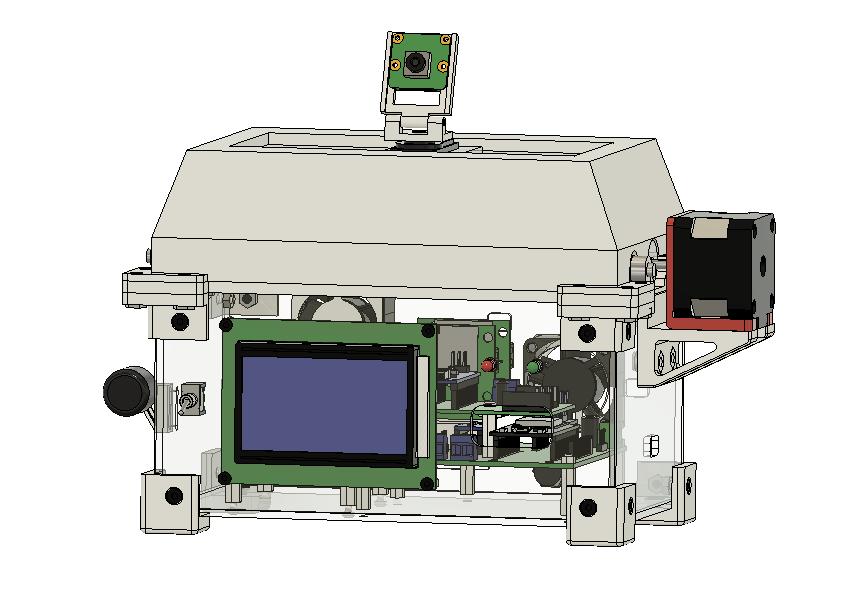

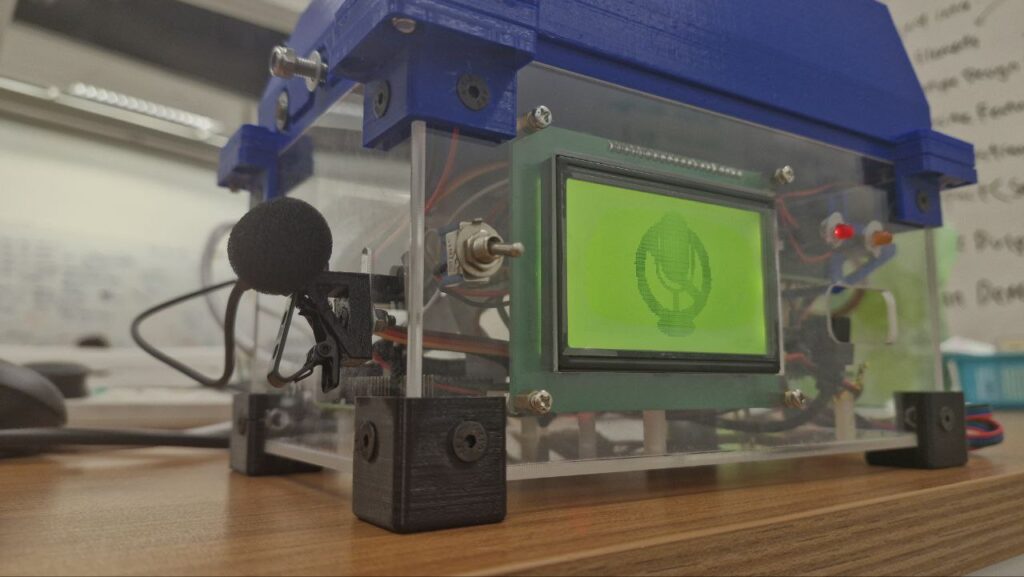

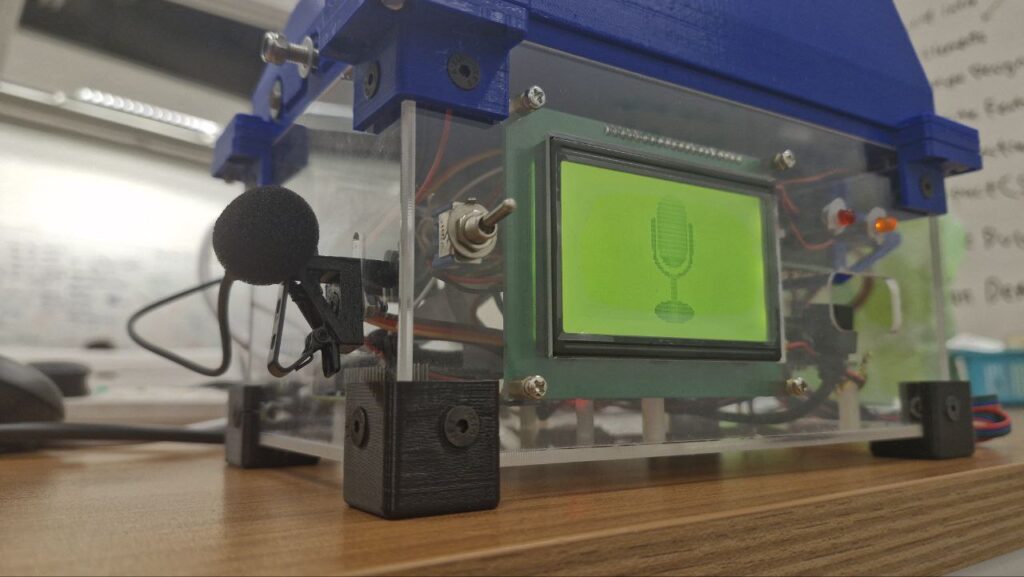

Control Box

The control box’s face tracking mechanism moves the camera to keep the user’s face centered in the camera frame, which is necessary to capture the user’s facial features accurately during operation.

The control box implements two-degree-of-freedom control of the camera: linear movement along the x-axis and rotation about the z-axis. This allows the camera to follow side-to-side movement of the user’s head during the training session, while keeping the control box compact enough to remain portable.
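The centering behaviour described above can be illustrated with a simple proportional controller. This is only a minimal sketch, not the control box's actual firmware: the `CameraCommand` type, the gain values, the deadband and the idea of driving both axes from the same horizontal error are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class CameraCommand:
    """Hypothetical actuator command for the two camera axes."""
    slide_mm: float    # linear movement along the x-axis
    rotate_deg: float  # rotation about the z-axis


def centering_command(face_x_px, frame_width_px,
                      slide_gain=0.05, rotate_gain=0.02, deadband_px=20):
    """Return a correction that nudges the face back toward the frame centre.

    face_x_px is the horizontal pixel position of the detected face centre.
    The gains and the deadband are made-up values, not measured ones.
    """
    error_px = face_x_px - frame_width_px / 2.0
    # Ignore small offsets so the camera does not jitter around the centre.
    if abs(error_px) <= deadband_px:
        return CameraCommand(0.0, 0.0)
    return CameraCommand(slide_mm=slide_gain * error_px,
                         rotate_deg=rotate_gain * error_px)


if __name__ == "__main__":
    # A face detected to the right of centre in a 640-pixel-wide frame.
    print(centering_command(520, 640))
```

Whether a positive correction means moving left or right depends on how the hardware is mounted, so the sign convention here is also an assumption.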

The microphone of the control box serves to capture and segment the user’s audio for use by E.V.A.’s Emotional AI report generator. Microphone recording is operated by a flip switch on the left-hand side of the box, with the LCD display indicating the current state of the microphone.

The Control Box stores both the audio recordings and the images taken during the session in a shared folder on the computer connected to it.

Virtual Interactive Space

Using the Raspberry Pi Camera Module on the Control Box, the user’s facial motion capture (mocap) data is recorded and converted to blendshape data, which is then processed into a format that the LiveLink plugin in Unreal Engine can recognise.

Unreal Engine then uses this data to animate the user’s MetaHuman avatar, projecting the user’s facial expressions onto the avatar’s face. This allows realistic facial expressions to be presented in the virtual space.
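To make the idea of blendshape data more concrete, the sketch below packages one frame of blendshape weights into a message. It is a hypothetical illustration only: the JSON layout, the `pack_blendshape_frame` function and the blendshape names are assumptions, and this is not the format that the LiveLink plugin actually expects.

```python
import json


def pack_blendshape_frame(subject, weights, timestamp_s):
    """Serialise one frame of blendshape weights as a JSON string.

    weights maps a blendshape name to its activation. Values are clamped
    to the range [0, 1] before being sent. The message layout is a
    made-up example, not the LiveLink wire format.
    """
    clamped = {name: min(1.0, max(0.0, float(value)))
               for name, value in sorted(weights.items())}
    return json.dumps({
        "subject": subject,
        "time": timestamp_s,
        "blendshapes": clamped,
    })


if __name__ == "__main__":
    # Illustrative names and values for a single captured frame.
    frame = {"jawOpen": 0.42, "eyeBlinkLeft": 1.3, "mouthSmileLeft": -0.1}
    print(pack_blendshape_frame("trainee_01", frame, 12.5))
```

Clamping keeps a noisy tracking result from driving the avatar's face into an unnatural pose.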

Unreal Engine also makes it possible to create various environments within a virtual landscape, which enables dynamic real-life scenarios to be replicated.

The flexibility offered by the engine allows a variety of training scenarios to be prepared, equipping trainees with the intuition and experience required to tackle a wider range of real-world scenarios.

AI Performance Report

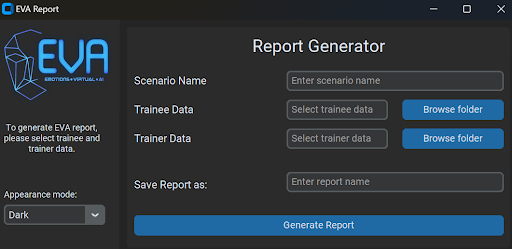

After training, users can launch the report generator executable, enter details of the training session, and attach the folders containing the data recorded by the control box. The data is then run through our Emotional AI models to generate a PDF report. More details about each model can be found in the other tabs.

The Report Generator comes in two versions, one for individuals and one for trainee-trainer pairs. The individual version allows trainees to quickly generate reports for their own reference, while the trainee-trainer pair version generates a more detailed report that accounts for both the trainee’s and the trainer’s interactions during training.

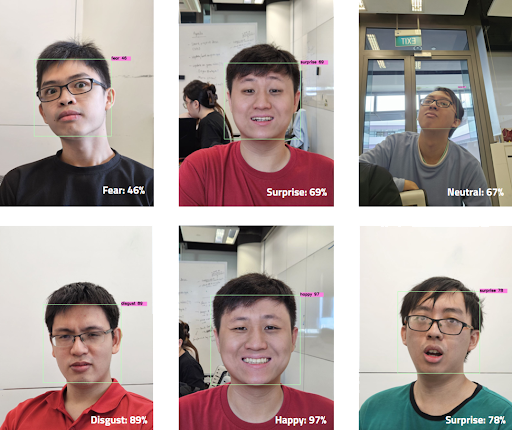

Our Facial Expression Recognition (FER) Model identifies the user’s emotion by extracting facial features from the pictures captured by the control box during the training session.

These features are then used to classify the user’s facial expression into one of 7 emotions: Anger, Disgust, Fear, Happiness, Neutral, Sadness and Surprise. The emotion with the highest probability is selected as the “identified” emotion.
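The selection step amounts to taking the class with the largest probability. A minimal sketch is shown below, assuming the model returns one probability per emotion. The `identify_emotion` function and the order of the classes in `EMOTIONS` are assumptions, not details taken from the FER model itself.

```python
# Assumed class order; the real model's output order may differ.
EMOTIONS = ["Anger", "Disgust", "Fear", "Happiness",
            "Neutral", "Sadness", "Surprise"]


def identify_emotion(probabilities):
    """Return the most likely emotion and its probability as a percentage."""
    if len(probabilities) != len(EMOTIONS):
        raise ValueError("expected one probability per emotion")
    # Index of the largest probability; ties resolve to the earliest class.
    best = max(range(len(EMOTIONS)), key=lambda i: probabilities[i])
    return EMOTIONS[best], probabilities[best] * 100.0


if __name__ == "__main__":
    # Made-up probabilities for a single captured picture.
    label, percent = identify_emotion([0.05, 0.05, 0.10, 0.60, 0.10, 0.05, 0.05])
    print(f"{label} ({percent:.1f}%)")
```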

Our Sentiment Analysis Model first requires the segmented audio recorded by the control box to be run through a speech-to-text algorithm. The transcribed text is then run through our distilled Robustly Optimized BERT Approach (DistilRoBERTa) Model to identify the emotional sentiment behind the speech.

The model then fits the sentiment of the speech to one of 7 emotions: Anger, Disgust, Fear, Happiness, Neutral, Sadness and Surprise. The emotion with the highest probability is selected as the “identified” emotion.
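The two stages above can be sketched as a small pipeline. This is only an outline under stated assumptions: `transcribe` and `classify` are hypothetical stand-ins for the speech-to-text algorithm and the sentiment model, and the dictionary of emotion probabilities is an assumed output format.

```python
def analyse_segments(segments, transcribe, classify):
    """Run each audio segment through speech-to-text, then sentiment analysis.

    transcribe: callable taking an audio segment and returning its text.
    classify:   callable taking text and returning {emotion: probability}.
    Both are placeholders supplied by the caller.
    """
    results = []
    for segment in segments:
        text = transcribe(segment)
        scores = classify(text)
        # The emotion with the highest probability is the identified one.
        identified = max(scores, key=scores.get)
        results.append({"text": text, "emotion": identified})
    return results


if __name__ == "__main__":
    # Stub stages so the sketch runs without any audio or model files.
    fake_transcripts = {"seg1.wav": "Please calm down, sir."}

    def fake_classify(text):
        return {"Anger": 0.1, "Neutral": 0.7, "Fear": 0.2}

    print(analyse_segments(["seg1.wav"], fake_transcripts.get, fake_classify))
```

Passing the two stages in as callables keeps the outline independent of any particular speech-to-text engine or model library.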