Lim Kwan Hui

Rashmi Kumar

Bernard Tan

Singapore’s growing tourism industry has brought a rising influx of visitors, including a surge in non-English-speaking tourists. This makes it harder to ensure a smooth and enjoyable visitor experience, as language barriers can create communication gaps between locals and international tourists. Navigating Singlish (Singapore’s unique blend of English, local slang, and dialects) can also be difficult, even for tourists with some knowledge of English, further complicating interactions and understanding. Addressing these communication challenges is essential to enhancing the experience of visitors from around the world.

How might we best allow security personnel to communicate with foreigners effectively and conveniently?

With the key requirements in mind, CrossTalk Secure was developed to support offline translation for a selection of Southeast Asian languages and languages commonly spoken in Singapore, allowing two users to converse comfortably, each in their own language, without jeopardising their privacy.

Through extensive research into the various components, we designed an integrated solution that addresses a key gap in existing products: the lack of secure offline translation for Southeast Asian languages. By leveraging AI edge computing, our approach eliminates the need for internet connectivity, ensuring secure and reliable translation for users in the region.

This approach presents a unique challenge: balancing demanding computational requirements with portability. To achieve optimal performance, we carefully considered hardware factors such as battery capacity and cooling, along with the design of the AI model pipeline.

When activated, the device delivers real-time transcription of spoken dialogue, text-to-text translation, and audio output of translated text in eight supported languages: English, Mandarin Chinese, Malay, Hindi, Indonesian, Tagalog, Thai, and Vietnamese.

Its split-screen design enables both users to view the transcribed conversation in their chosen language simultaneously, enhancing comprehension and ensuring accurate communication.

Our speech-to-speech translation system uses a step-by-step approach, where each part of the process is handled by a specialised model.


First, the system detects when someone is speaking. It then identifies the spoken language and transcribes the speech into text. Next, the text is split into sentences and translated into the target language sentence by sentence. Finally, when there is a lull in the conversation, a voice synthesis model speaks the translated text aloud. Compared with a single all-in-one model, this structured approach offers lower latency and greater flexibility.
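The modular flow above can be illustrated with a minimal Python sketch of its later stages. It assumes that upstream voice-activity detection, language identification and transcription already deliver text fragments and silence durations. `translate` and `speak` are hypothetical stand-ins for the translation and voice synthesis models, and the 0.8-second lull threshold and punctuation-based sentence splitting are illustrative assumptions, not CrossTalk Secure's actual parameters.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# Assumption: sentences end with ., ! or ? followed by whitespace. Languages such
# as Thai or Mandarin would need a language-specific segmenter instead.
_SENTENCE_BOUNDARY = re.compile(r"(?<=[.!?])\s+")


def split_sentences(text: str) -> Tuple[List[str], str]:
    """Return the complete sentences in `text` and any trailing unfinished fragment."""
    stripped = text.strip()
    if not stripped:
        return [], ""
    parts = _SENTENCE_BOUNDARY.split(stripped)
    if stripped[-1] in ".!?":
        return parts, ""
    return parts[:-1], parts[-1]


@dataclass
class StreamingTranslator:
    """Hypothetical glue between the transcription output, the translator and TTS."""

    translate: Callable[[str], str]  # stand-in for the text-to-text translation model
    speak: Callable[[str], None]     # stand-in for the voice synthesis model
    lull_seconds: float = 0.8        # assumed silence length that counts as a lull
    _pending: str = field(default="", init=False)                # unfinished sentence
    _queue: List[str] = field(default_factory=list, init=False)  # translated, unspoken
    _silence: float = field(default=0.0, init=False)             # silence so far

    def on_transcript(self, text: str) -> None:
        """Handle new transcribed text: translate each sentence once it completes."""
        self._silence = 0.0
        sentences, self._pending = split_sentences(self._pending + " " + text)
        self._queue.extend(self.translate(s) for s in sentences)

    def on_silence(self, seconds: float) -> None:
        """Handle silence reported by the VAD; speak queued output once a lull is reached."""
        self._silence += seconds
        if self._silence < self.lull_seconds:
            return
        if self._pending:  # treat a long pause as the end of an unpunctuated sentence
            self._queue.append(self.translate(self._pending))
            self._pending = ""
        for sentence in self._queue:
            self.speak(sentence)
        self._queue.clear()


# Toy demo: str.upper plays the role of the "translator".
spoken: List[str] = []
demo = StreamingTranslator(translate=str.upper, speak=spoken.append)
demo.on_transcript("Where is the MRT station? It is")
demo.on_silence(0.3)  # short pause: not yet a lull
demo.on_transcript("near the mall.")
demo.on_silence(1.0)  # lull: both translated sentences are spoken
print(spoken)  # ['WHERE IS THE MRT STATION?', 'IT IS NEAR THE MALL.']
```

Translating each sentence as soon as it completes, while deferring speech until a lull, lets translation work overlap with ongoing speech. This is one plausible reading of the latency benefit described above, not a confirmed detail of the system.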

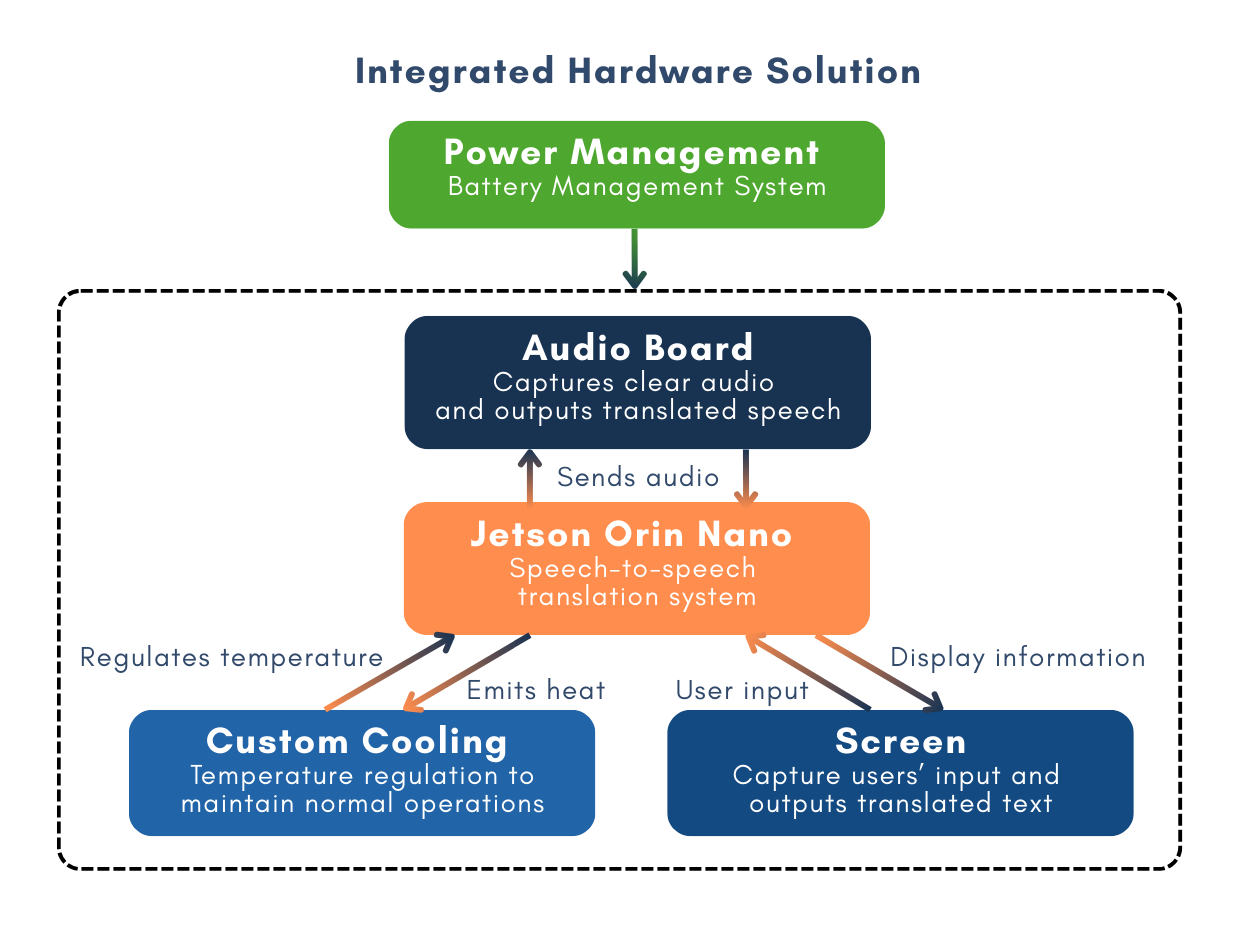

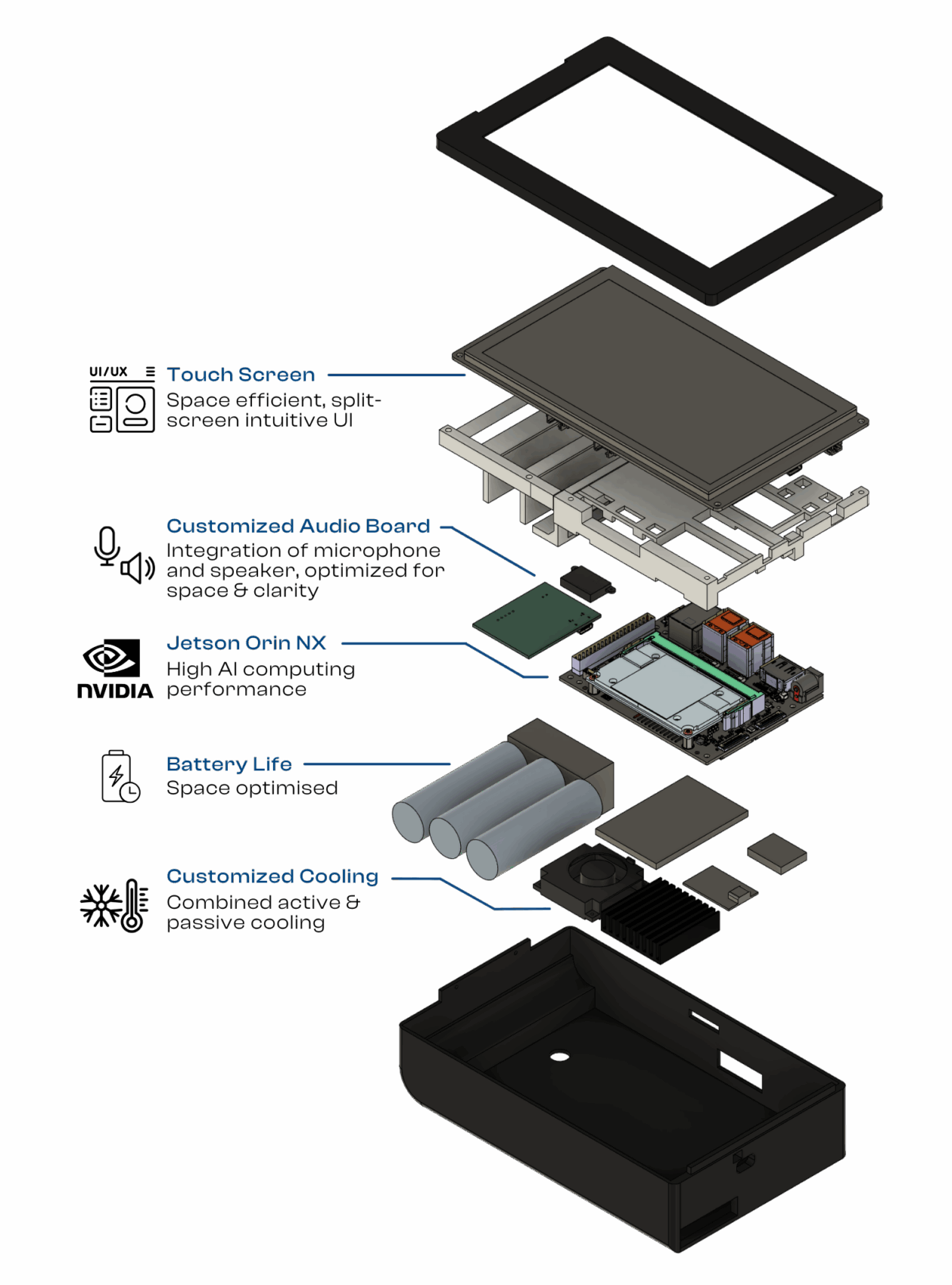

Our customized integrated hardware solution delivers a compact form factor while combining performance, efficiency, and security.

The compute unit handles all intensive processing locally and offline, ensuring high security without relying on cloud services or Wi-Fi. The audio board is designed for clear speech capture, which improves translation accuracy, while the speaker delivers crisp audio output. A custom cooling system maintains peak performance under demanding workloads, and the touchscreen provides an intuitive user experience. With a battery that supports 3.5 hours of continuous AI-powered speech translation, our meticulously integrated design offers a reliable, low-cost, high-performance solution for seamless communication.

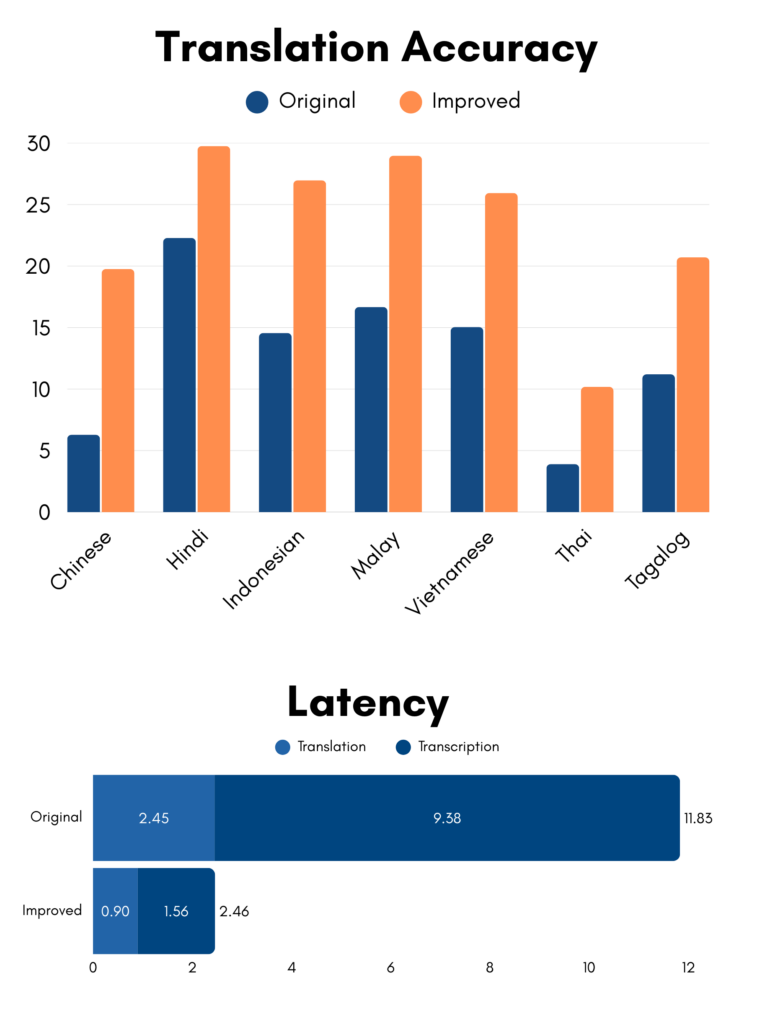

To evaluate the translation accuracy of our speech-to-speech system, we used sacreBLEU, a standard implementation of the BLEU metric widely used to assess machine translation quality. We ran tests on conversational audio data, simulating real-time input as if it were streamed directly from a microphone. The updated pipeline achieved a 2.25x improvement in BLEU score over the baseline, reflecting significantly more accurate translations. This gain is particularly important in conversational settings, where preserving meaning and nuance in real-time dialogue is critical for effective communication.
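To make the BLEU comparison concrete, here is a simplified, self-contained corpus-level BLEU in Python: clipped n-gram precision for n = 1 to 4, combined by geometric mean and scaled by a brevity penalty. It assumes whitespace tokenisation, a single reference per sentence and no smoothing, so its scores will not exactly match sacreBLEU, which applies its own tokenisation and smoothing. It is a sketch of the metric, not the evaluation code used for this project.

```python
import math
from collections import Counter
from typing import List, Sequence


def ngrams(tokens: Sequence[str], n: int) -> Counter:
    """Count all n-grams (as tuples) in a token sequence."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(hypotheses: List[str], references: List[str], max_n: int = 4) -> float:
    """Simplified corpus BLEU (0-100): one reference per hypothesis, no smoothing."""
    matches = [0] * max_n  # clipped n-gram matches, summed over the corpus
    totals = [0] * max_n   # hypothesis n-gram counts, summed over the corpus
    hyp_len = ref_len = 0
    for hyp, ref in zip(hypotheses, references):
        h, r = hyp.split(), ref.split()
        hyp_len += len(h)
        ref_len += len(r)
        for n in range(1, max_n + 1):
            h_counts, r_counts = ngrams(h, n), ngrams(r, n)
            # Clip each hypothesis n-gram count by its count in the reference.
            matches[n - 1] += sum(min(c, r_counts[g]) for g, c in h_counts.items())
            totals[n - 1] += max(len(h) - n + 1, 0)
    if hyp_len == 0 or min(matches) == 0:
        return 0.0  # without smoothing, any zero precision gives BLEU = 0
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    # Penalise hypotheses that are shorter overall than the references.
    brevity_penalty = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return 100 * brevity_penalty * math.exp(log_precision)


print(round(corpus_bleu(["the cat sat on the mat"], ["the cat sat on a mat"]), 2))  # 53.73
```

A relative figure such as the 2.25x improvement above would presumably be the ratio of two corpus scores computed against the same references, for example `corpus_bleu(updated, refs) / corpus_bleu(baseline, refs)`, where `updated`, `baseline` and `refs` are hypothetical sentence lists.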

Additionally, we achieved a 4.8x reduction in model inference time across the speech-to-speech pipeline, enabling translations to occur much closer to real-time. This improvement is crucial for seamless and efficient communication, especially in fast-paced conversational scenarios where delays can disrupt the natural flow of dialogue.

During user validation testing, pairs of participants used CrossTalk Secure to facilitate conversations in English and one target language. To assess perceived performance in areas such as audibility, transcription accuracy, translation accuracy, and response speed, each participant rated these factors on a scale from 1 to 5.

Average ratings ranged from 3.4 to 4.4, with an overall mean of 3.8 out of 5, indicating generally satisfactory perceived performance.

Participants also provided feedback on ease of use and overall satisfaction through a short questionnaire, offering a broader view of subjective usability. This resulted in a mean System Usability Scale (SUS) score of 68.4 out of 100, which falls within the moderate usability range.
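Assuming the standard ten-item SUS questionnaire, each participant's responses (1 to 5) are scored as follows: odd-numbered, positively worded items contribute the response minus 1, even-numbered, negatively worded items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a score from 0 to 100. A study-level figure such as the 68.4 reported above is the mean of these per-participant scores. The responses in this sketch are invented for illustration and are not the study's data.

```python
from statistics import mean
from typing import List, Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Score one participant's ten SUS responses (each 1-5) on the 0-100 scale."""
    if len(responses) != 10 or any(not 1 <= r <= 5 for r in responses):
        raise ValueError("SUS expects exactly ten responses on a 1-5 scale")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (r - 1) if item % 2 == 1 else (5 - r)
    return total * 2.5


# Hypothetical responses from two participants (illustrative only, not study data).
participants: List[List[int]] = [
    [4, 2, 4, 2, 4, 2, 4, 2, 4, 2],
    [5, 1, 4, 2, 3, 3, 4, 2, 5, 1],
]
scores = [sus_score(p) for p in participants]
print(scores, mean(scores))  # [75.0, 80.0] 77.5
```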

We would like to express our sincere gratitude to several individuals who have been instrumental in supporting us throughout this ongoing project. First, we extend our deepest thanks to KLASS Engineering & Solutions, our industry mentor, for providing the opportunity to work on this project. We would also like to thank Toh Si Hui, Terence Goh, Lu Zheng Hao, Nicholas Chan and Syed Asif Bin Ascar for their invaluable help and guidance throughout the process.

We are also extremely grateful to Prof. Lim Kwan Hui and Prof. Rakesh Nagi for their exceptional support. Their technical insights and guidance were crucial in shaping the project, and their assistance in refining both the report and presentation flow was invaluable.

Additionally, we would like to thank Dr. Bernard Tan and Ms Rashmi Kumar for their meticulous review and keen eye, which helped us significantly improve the presentation and showcase material for this project.

Their collective support and expertise have been fundamental to the success of this project, and we are deeply appreciative of their contributions.

Vote for our project at the exhibition! Your support is vital in recognizing our creativity. Join us in celebrating innovation and contributing to our success. Thank you for being part of our journey!

At Singapore University of Technology and Design (SUTD), we believe that the power of design is rooted in understanding human experiences and needs, and in creating innovations that enhance and transform the way we live. This is why we have developed a multi-disciplinary curriculum, delivered via a hands-on, collaborative learning pedagogy and environment, that concludes in a Capstone project.

The Capstone project is a collaboration between companies and senior-year students. Students of different majors come together to work in teams and contribute their technology and design expertise to solve real-world challenges faced by companies. The Capstone project will culminate with a design showcase, unveiling the innovative solutions from the graduating cohort.

The Capstone Design Showcase is held annually to celebrate the success of our graduating students and the enthralling multi-disciplinary projects they have developed.